Cross-Validation in Neuroimaging ML: A Complete Guide to Protocols, Pitfalls, and Best Practices for Research

This comprehensive guide examines cross-validation (CV) protocols for neuroimaging machine learning, addressing critical challenges like data leakage, site/scanner bias, and small sample sizes.

Abstract

This comprehensive guide examines cross-validation (CV) protocols for neuroimaging machine learning, addressing critical challenges like data leakage, site/scanner bias, and small sample sizes. We explore foundational concepts, detail methodological implementations (including nested CV and cross-validation across sites), provide troubleshooting strategies for overfitting and bias, and compare validation frameworks for optimal generalizability. Designed for researchers, scientists, and drug development professionals, this article synthesizes current best practices to ensure robust, reproducible, and clinically meaningful predictive models in biomedical research.

The Why and What: Foundational Principles of CV for Neuroimaging Data

Standard machine learning (ML) validation, primarily k-fold cross-validation (CV), assumes that data samples are independent and identically distributed (i.i.d.). Neuroimaging data from modalities like fMRI, sMRI, and DTI intrinsically violate this assumption due to complex, structured dependencies originating from scanning sessions, within-subject correlations, and site/scanner effects. Applying standard CV leads to data leakage and overly optimistic performance estimates, compromising the validity and generalizability of models for biomarker discovery and clinical translation.

Table 1: Common Pitfalls and Their Impact on Model Performance

| Pitfall | Description | Typical Performance Inflation (Reported Range) |

|---|---|---|

| Non-Independence | Splitting folds without respecting subject boundaries, allowing data from the same subject in both train and test sets. | Accuracy inflation: 10-40 percentage points. AUC can rise from chance (~0.5) to >0.8. |

| Site/Scanner Effects | Training on data from one scanner/site and testing on another without proper harmonization, or leaking site information across folds. | Performance drops of 15-30% accuracy when tested on a new site versus internal CV. |

| Spatial Autocorrelation | Voxel- or vertex-level features are not independent; nearby features are highly correlated. | Leads to spuriously high feature importance and unreliable brain maps. |

| Temporal Autocorrelation (fMRI) | Sequential time points within a run or session are highly correlated. | Inflates test-retest reliability estimates and classification accuracy in task-based paradigms. |

| Confounding Variables | Age, sex, or motion covariates correlated with both the label and imaging features can be learned as shortcut signals. | Can produce significant classification (e.g., AUC >0.7) for a disease label using only healthy controls from different age groups. |

Table 2: Comparison of Validation Protocols

| Validation Protocol | Procedure | Appropriateness for Neuroimaging | Key Limitation |

|---|---|---|---|

| Standard k-Fold CV | Random partition of all samples into k folds. | Fails. Severely breaches independence. | Grossly optimistic results. |

| Subject-Level (Leave-Subject-Out) CV | All data from one subject (or N subjects) held out as test set per fold. | Essential baseline. Preserves subject independence. | Can be computationally expensive; may have high variance. |

| Group-Level (Leave-Group-Out) CV | All data from a specific group (e.g., all subjects from Site 2) held out per fold. | Critical for generalizability testing. Tests robustness to site/scanner. | Requires multi-site/cohort data. |

| Nested CV | Outer loop for performance estimation (subject-level split), inner loop for hyperparameter tuning. | Gold Standard. Provides unbiased performance estimate. | Computationally intensive; requires careful design. |

| Split-Half or Hold-Out | Single split into training and test sets at the subject level. | Acceptable for large datasets. Simple and clear. | High variance estimate; wasteful of data. |
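The difference between the first two rows of the table can be illustrated on synthetic data. The sketch below is an assumption-laden toy, not a result from any study: each hypothetical subject gets a stable feature "fingerprint" that carries no diagnostic information, and labels are assigned to subjects at random. A 1-nearest-neighbour classifier then looks accurate under random k-fold (it re-identifies the subject) and falls to roughly chance once folds respect subject boundaries.

```python
import numpy as np
from sklearn.model_selection import GroupKFold, KFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)
n_subjects, scans_per_subject, n_features = 40, 10, 20  # hypothetical sizes

# Each subject has a stable fingerprint that is unrelated to the label.
fingerprints = rng.normal(size=(n_subjects, n_features))
subject_labels = rng.permutation(np.repeat([0, 1], n_subjects // 2))

# Repeated scans = fingerprint + small measurement noise.
groups = np.repeat(np.arange(n_subjects), scans_per_subject)
X = fingerprints[groups] + 0.1 * rng.normal(size=(groups.size, n_features))
y = subject_labels[groups]

clf = KNeighborsClassifier(n_neighbors=1)

# Standard k-fold: scans from one subject land in both train and test folds.
leaky_cv = KFold(n_splits=5, shuffle=True, random_state=0)
leaky_acc = cross_val_score(clf, X, y, cv=leaky_cv).mean()

# Subject-level k-fold: all scans of a subject stay in the same fold.
grouped_acc = cross_val_score(clf, X, y, groups=groups, cv=GroupKFold(n_splits=5)).mean()

print(f"random k-fold accuracy:        {leaky_acc:.2f}")
print(f"subject-level k-fold accuracy: {grouped_acc:.2f}")
```

The only change between the two estimates is the splitter; the data and the model are identical.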

Detailed Experimental Protocols

Protocol 1: Nested Cross-Validation for Unbiased Estimation

- Aim: To obtain a statistically rigorous estimate of model performance while optimizing hyperparameters without leakage.

- Procedure:

- Outer Loop (Performance Estimation): Split all subjects into K folds (e.g., 5 or 10). For each fold i:

- Hold out fold i as the outer test set.

- The remaining K-1 folds form the outer training set.

- Inner Loop (Model Selection): On the outer training set, perform a second, independent CV loop (e.g., 5-fold) respecting subject boundaries.

- This inner loop is used to select optimal hyperparameters (e.g., via grid search) and/or perform feature selection.

- The best model configuration from the inner loop is retrained on the entire outer training set.

- Testing: The final retrained model is evaluated once on the held-out outer test set (fold i).

- Aggregation: The process repeats for all K outer folds. The average performance across all outer test folds is the final unbiased estimate.

- Critical Note: Feature selection must be repeated within each inner loop to prevent leakage of information from the validation fold back into the training process.
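The protocol above can be sketched with scikit-learn as follows. This is a minimal illustration under assumptions: the data are synthetic (60 hypothetical subjects with two scans each), and the pipeline steps, grid values, and fold counts are arbitrary choices rather than recommendations. Scaling and feature selection sit inside the pipeline so they are refit on each training fold, and subject IDs are passed to both loops.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import GridSearchCV, GroupKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in: one feature vector and label per subject, two noisy scans each.
rng = np.random.default_rng(0)
X_subj, y_subj = make_classification(
    n_samples=60, n_features=100, n_informative=10, random_state=0
)
groups = np.repeat(np.arange(60), 2)  # subject ID for every scan
X = X_subj[groups] + 0.5 * rng.normal(size=(groups.size, X_subj.shape[1]))
y = y_subj[groups]

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(f_classif)),
    ("clf", SVC(kernel="linear")),
])
param_grid = {"select__k": [10, 50], "clf__C": [0.1, 1.0]}

outer_scores = []
for train_idx, test_idx in GroupKFold(n_splits=5).split(X, y, groups):
    # Inner loop: subject-level CV on the outer training set only.
    search = GridSearchCV(pipe, param_grid, cv=GroupKFold(n_splits=3), scoring="roc_auc")
    search.fit(X[train_idx], y[train_idx], groups=groups[train_idx])
    # refit=True (the default) retrains the best configuration on the whole
    # outer training set; it is then scored once on the held-out outer fold.
    outer_scores.append(search.score(X[test_idx], y[test_idx]))

print(f"nested CV AUC: {np.mean(outer_scores):.2f} +/- {np.std(outer_scores):.2f}")
```

The explicit outer loop is used here so that the subject IDs of the outer training set can be handed to the inner search directly.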

Protocol 2: Leave-One-Site-Out Cross-Validation

- Aim: To assess model generalizability across data acquisition sites or scanners.

- Procedure:

- For a dataset comprising subjects from S different sites (or scanners), iterate over each site j.

- Designate all data from site j as the test set.

- Use all data from the remaining S-1 sites as the training set.

- Train the model on the training set. Optional but recommended: Perform hyperparameter tuning via nested CV within the (S-1)-site training set.

- Evaluate the trained model on the completely unseen site j.

- Repeat for all sites. Report performance metrics for each left-out site separately and as an average.

- Interpretation: A significant drop in performance on left-out sites compared to subject-level CV within a single site indicates strong site effects and poor generalizability.

Visualizations

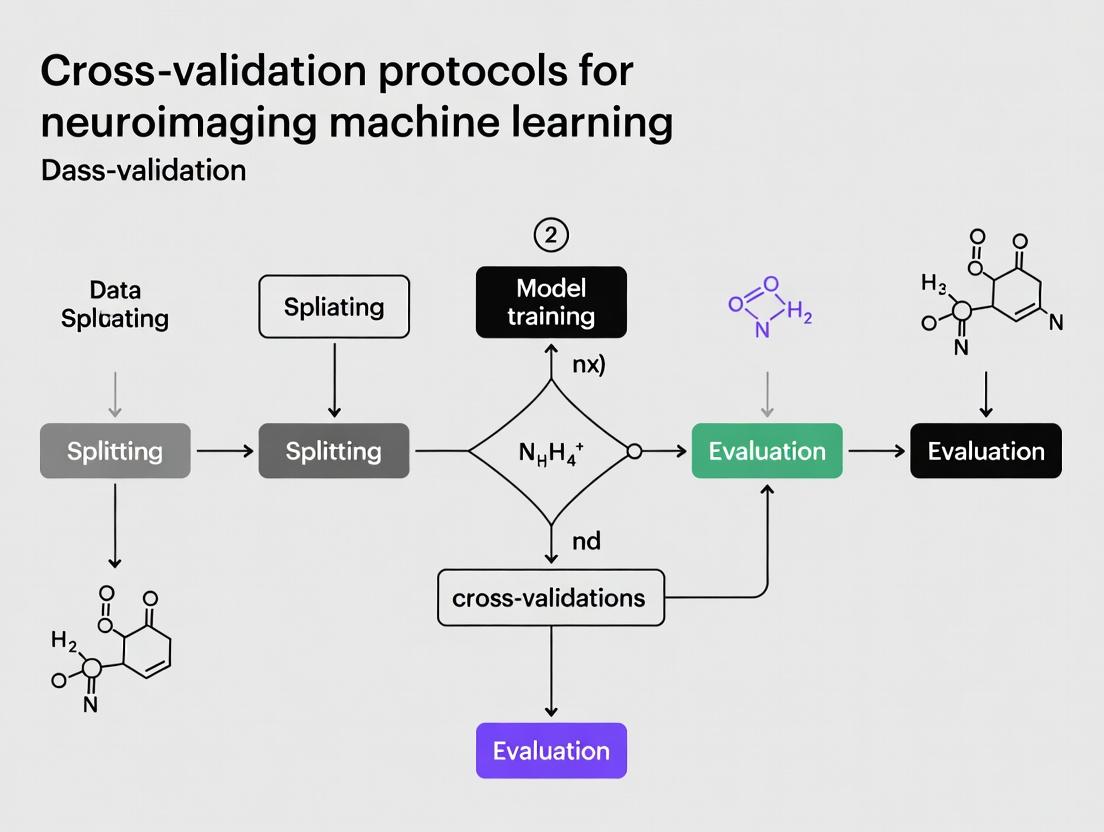

Title: Why Standard CV Fails for Neuroimaging

Title: Nested Cross-Validation Protocol

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Tools for Robust Neuroimaging ML Validation

| Item / Solution | Category | Function / Purpose |

|---|---|---|

| Nilearn | Software Library | Provides scikit-learn compatible tools for neuroimaging data; subject-level CV is obtained by combining them with scikit-learn's group-aware splitters and subject IDs. |

| scikit-learn GroupShuffleSplit | Algorithm Utility | Critical for ensuring no same-subject data across train/test splits (using subject ID as the group parameter). |

| ComBat / NeuroHarmonize | Data Harmonization Tool | Removes site and scanner effects from extracted features before model training, improving multi-site generalizability. |

| Permutation Testing | Statistical Test | Non-parametric method to establish the significance of model performance against the null distribution (e.g., using permuted labels). |

| ABIDE, ADNI, UK Biobank | Reference Datasets | Large-scale, multi-site neuroimaging datasets that require subject- and site-level CV protocols, serving as benchmarks. |

| Datalad / BIDS | Data Management | Ensures reproducible data structuring (Brain Imaging Data Structure) and version control, crucial for tracking subject-wise splits. |

| Nistats / SPM / FSL | Preprocessing Pipelines | Standardized extraction of features (e.g., ROI timeseries, voxel-based morphometry maps) which become inputs for ML models. |
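As an illustration of the permutation testing entry in the table, the sketch below uses scikit-learn's `permutation_test_score` on synthetic data. It assumes one feature vector per subject, so a plain stratified split already respects subject boundaries; the classifier, the number of permutations, and the dataset size are placeholder choices.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, permutation_test_score

# Synthetic stand-in: 80 subjects, one row each.
X, y = make_classification(n_samples=80, n_features=30, n_informative=5, random_state=0)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
score, perm_scores, p_value = permutation_test_score(
    LogisticRegression(max_iter=1000),
    X,
    y,
    cv=cv,
    n_permutations=200,   # labels are shuffled this many times
    scoring="accuracy",
    random_state=0,
)

print(f"observed accuracy: {score:.2f}")
print(f"mean accuracy under permuted labels: {perm_scores.mean():.2f}")
print(f"permutation p-value: {p_value:.3f}")
```

The same cross-validation scheme is applied to the observed and the permuted labels, which is what makes the null distribution comparable to the reported score.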

Within neuroimaging machine learning research, constructing predictive brain models necessitates a rigorous understanding of model error components—bias, variance, and their interplay—to ensure generalizability to new populations and clinical settings. This document provides application notes and protocols framed within a thesis on cross-validation, detailing how to diagnose, quantify, and mitigate these issues.

Core Definitions and Quantitative Framework

Table 1: Core Error Components in Predictive Brain Modeling

| Component | Mathematical Definition | Manifestation in Neuroimaging ML | Impact on Generalizability |

|---|---|---|---|

| Bias | $ \text{Bias}[\hat{f}(x)] = E[\hat{f}(x)] - f(x) $ | Underfitting; systematic error from oversimplified model (e.g., linear model for highly nonlinear brain dynamics). | High bias leads to consistently poor performance across datasets (poor external validation). |

| Variance | $ \text{Var}[\hat{f}(x)] = E[(\hat{f}(x) - E[\hat{f}(x)])^2] $ | Overfitting; excessive sensitivity to noise in training data (e.g., complex deep learning on small fMRI datasets). | High variance causes large performance drops between training/test sets and across sites. |

| Irreducible Error | $ \sigma_\epsilon^2 $ | Measurement noise (scanner drift, physiological noise) and stochastic biological variability. | Fundamental limit on prediction accuracy, even with a perfect model. |

| Expected Test MSE | $ E[(y - \hat{f}(x))^2] = \text{Bias}[\hat{f}(x)]^2 + \text{Var}[\hat{f}(x)] + \sigma_\epsilon^2 $ | Total error on unseen data, decomposable into the above components. | Direct measure of model generalizability. |

Table 2: Typical Quantitative Indicators from Cross-Validation Studies

| Metric / Observation | Suggests High Bias | Suggests High Variance | Target Range for Generalizability |

|---|---|---|---|

| Train vs. Test Performance | Both train and test error are high. | Train error is very low, test error is much higher. | Small, consistent gap (e.g., <5-10% AUC difference). |

| Cross-Validation Fold Variance | Low variance in scores across folds. | High variance in scores across folds. | Low variance across folds (stable predictions). |

| Multi-Site Validation Drop | Consistently poor performance across all external sites. | High performance variability across external sites; severe drops at some. | Robust performance (e.g., AUC drop < 0.05) across independent cohorts. |

Detailed Experimental Protocols for Diagnosis

Protocol 1: Bias-Variance Decomposition via Bootstrapped Learning Curves

Objective: Diagnose whether a brain phenotype prediction model suffers primarily from bias or variance.

Materials: Preprocessed neuroimaging data (e.g., fMRI connectivity matrices, structural volumes) with target labels.

Procedure:

- Data Preparation: Hold out a definitive test set (20-30%) for final evaluation. Use the remainder for analysis.

- Bootstrap Sampling: Generate B (e.g., 100) bootstrap samples from the training pool.

- Iterative Training: For each sample size n (e.g., 10%, 20%, ..., 100% of training pool):

- Train an instance of your model on the first n instances of each bootstrap sample.

- Record the prediction error on the full training pool and the held-out test set for each trained model.

- Calculation: For each sample size n:

- Average Training Error: Calculate the mean error across all B models. This approximates E[Training Error].

- Average Test Error: Calculate the mean test error across all B models. This is the Expected Test Error.

- Variance Estimation: Compute the variance of the predictions for each data point across the B models, then average across all data points.

- Visualization & Interpretation: Plot learning curves (sample size vs. error).

- High Bias Indicator: Both training and test error converge to a high value as n increases.

- High Variance Indicator: A large gap between training and test error that narrows slowly as n increases.
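A minimal sketch of this procedure on synthetic regression data is given below. The model (ridge regression), the number of bootstrap samples, and the sample-size grid are assumptions chosen to keep the example fast. Drawing n rows with replacement stands in for "the first n instances of a bootstrap sample", and, following the steps above, the training-side error is recorded on the full training pool.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=300, n_features=50, noise=10.0, random_state=0)
# Hold out a definitive test set (25% here); the rest is the training pool.
X_pool, X_test, y_pool, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

rng = np.random.default_rng(0)
n_boot = 50
curve = {}  # n -> (mean pool error, mean test error, mean prediction variance)

for frac in (0.1, 0.25, 0.5, 1.0):
    n = int(frac * len(X_pool))
    pool_err, test_err, test_preds = [], [], []
    for _ in range(n_boot):
        idx = rng.choice(len(X_pool), size=n, replace=True)  # bootstrap draw of size n
        model = Ridge(alpha=1.0).fit(X_pool[idx], y_pool[idx])
        pool_err.append(mean_squared_error(y_pool, model.predict(X_pool)))
        pred = model.predict(X_test)
        test_err.append(mean_squared_error(y_test, pred))
        test_preds.append(pred)
    # Variance of predictions per test point across bootstrap models, then averaged.
    pred_var = np.var(np.stack(test_preds), axis=0).mean()
    curve[n] = (np.mean(pool_err), np.mean(test_err), pred_var)

for n, (tr, te, var) in curve.items():
    print(f"n={n:4d}  pool MSE={tr:9.1f}  test MSE={te:9.1f}  prediction variance={var:9.1f}")
```

Plotting the three columns against n gives the learning curves described in the final step.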

Protocol 2: Nested Cross-Validation for Generalizability Assessment

Objective: Obtain an unbiased estimate of model performance and its variance across different data partitions.

Procedure:

- Outer Loop (Performance Estimation): Split full dataset into K folds (e.g., 5 or 10). For each outer fold k:

- Hold out fold k as the test set.

- Use the remaining K-1 folds for the inner loop.

- Inner Loop (Model Selection & Tuning): On the K-1 outer training folds:

- Perform a second, independent cross-validation to select optimal hyperparameters (e.g., regularization strength, kernel type).

- Do not use the held-out outer test set for any decision.

- Final Training & Testing: Train a final model on the entire K-1 outer training folds using the optimal hyperparameters. Evaluate it on the held-out outer test fold k.

- Aggregation: After iterating through all K outer folds, aggregate the test set performances (e.g., mean AUC, accuracy). The standard deviation of these K scores estimates the performance variance due to data sampling.

Diagram Title: Nested Cross-Validation Workflow for Unbiased Estimation

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Robust Brain Model Development

| Resource Category | Specific Example / Tool | Function in Managing Bias/Variance |

|---|---|---|

| Standardized Data | UK Biobank Neuroimaging, ABCD Study, Alzheimer's Disease Neuroimaging Initiative (ADNI) | Provides large, multi-site datasets to reduce variance from small samples and allow for meaningful external validation. |

| Feature Extraction Libraries | Nilearn (Python), CONN toolbox (MATLAB), FSL, FreeSurfer | Provides consistent, validated methods for deriving features from raw images, reducing bias from ad-hoc preprocessing. |

| ML Frameworks with CV | scikit-learn (Python), BrainIAK, nilearn.decoding | Offer built-in, standardized implementations of nested CV, bootstrapping, and regularization methods. |

| Regularization Tools | L1/L2 (Lasso/Ridge) in scikit-learn, Dropout in PyTorch/TensorFlow | Directly reduces model variance by penalizing complexity or enabling robust ensemble learning. |

| Harmonization Tools | ComBat, NeuroHarmonize, Density-Based | Mitigates site/scanner-induced variance (bias) in multi-center data, improving generalizability. |

| Model Cards / Reporting | TRIPOD+ML checklist, Model Card Toolkit | Framework for transparent reporting of training conditions, evaluation, and known biases. |

Advanced Protocols for Enhancing Generalizability

Protocol 3: Domain Adaptation for Multi-Site fMRI Classification

Objective: Adapt a classifier trained on a source imaging site to perform well on a target site with different acquisition parameters.

Materials: Labeled data from source site (ample), and labeled or unlabeled data from target site.

Procedure:

- Feature Extraction: Extract identical features (e.g., ROI time series correlations) from both source and target datasets.

- Harmonization: Apply a harmonization or domain adaptation algorithm (e.g., ComBat or a Domain-Adversarial Neural Network, DANN) to align the feature distributions of the source and target data.

- Model Training: Train the predictive model on the harmonized source data (and optionally a small subset of labeled target data).

- Validation: Test the model on the held-out, harmonized target data. Compare performance to a model trained on source data without adaptation.
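The comparison in the last step can be sketched with a deliberately simple alignment. The code below is not ComBat or DANN: it swaps in per-site z-scoring, which uses only unlabeled target features, as a stand-in for the harmonization step. The simulated site offset and scale, the helper names `standardize_per_site` and `simulate_site`, and the assumption of similar class proportions at both sites are all hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)


def simulate_site(n, offset, scale):
    """Simulate one site: a shared label signal plus a site-specific shift and gain."""
    y = rng.integers(0, 2, size=n)
    X = rng.normal(size=(n, 10))
    X[:, 0] += 2.0 * y            # label-related signal shared by all sites
    return X * scale + offset, y  # scanner-like distortion of every feature


def standardize_per_site(X):
    """Z-score each feature with this site's own mean and SD (labels are not used)."""
    sd = X.std(axis=0)
    sd[sd == 0] = 1.0
    return (X - X.mean(axis=0)) / sd


X_src, y_src = simulate_site(200, offset=0.0, scale=1.0)  # source site
X_tgt, y_tgt = simulate_site(200, offset=5.0, scale=3.0)  # target site

clf = LogisticRegression(max_iter=1000)

# Baseline: train on source, test on target without any adaptation.
acc_raw = clf.fit(X_src, y_src).score(X_tgt, y_tgt)

# Adapted: align each site's feature distribution, then train and test.
acc_aligned = clf.fit(standardize_per_site(X_src), y_src).score(
    standardize_per_site(X_tgt), y_tgt
)

print(f"target accuracy without adaptation: {acc_raw:.2f}")
print(f"target accuracy with per-site standardization: {acc_aligned:.2f}")
```

Per-site standardization can remove genuine group differences when class proportions differ between sites, which is one reason dedicated harmonization methods exist.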

Diagram Title: Domain Adaptation Workflow for Multi-Site Data

Cross-validation (CV) is a cornerstone of robust machine learning in neuroimaging, designed to estimate model generalizability while mitigating overfitting. The choice of CV protocol is critical and is dictated by the data structure, sample size, and overarching research question. This document details the primary CV protocols, their applications, and implementation guidelines within neuroimaging research for drug development and biomarker discovery.

Core Cross-Validation Protocols: Methodologies & Applications

k-Fold Cross-Validation

Experimental Protocol:

- Partition: Randomly shuffle the entire dataset and split it into k approximately equal-sized, disjoint folds (typical k = 5 or 10).

- Iterate: For i = 1 to k: a. Designate fold i as the test set. b. Use the remaining k-1 folds as the training set. c. Train the model on the training set. d. Evaluate the model on the held-out test fold, recording performance metrics (e.g., accuracy, AUC).

- Aggregate: Compute the final model performance as the mean (and standard deviation) of the performance across all k iterations.

Use Case: Standard protocol for homogeneous, single-site neuroimaging datasets with ample sample size. Provides a stable estimate of generalization error.

Stratified k-Fold Cross-Validation

Experimental Protocol:

- Follow the standard k-fold procedure, but ensure that each fold maintains the same class (or group) proportion as the original dataset.

- This is achieved by stratifying the data based on the target label prior to splitting.

Use Case: Essential for imbalanced datasets (e.g., more control subjects than patients) to prevent folds with zero representation of a minority class.

Leave-One-Subject-Out (LOSO) Cross-Validation

Experimental Protocol:

- Partition: For a dataset with N subjects, create N folds. Each fold consists of all data from a single, unique subject as the test set.

- Iterate: For each subject s: a. Use all data from subject s as the test set. b. Use all data from the remaining N-1 subjects as the training set. c. Train and evaluate the model as above.

- Aggregate: Average performance across all N subjects.

Use Case: Ideal for datasets with small sample sizes or where each subject contributes many correlated observations (e.g., multiple trials or time points per subject). It is a special case of subject-level (grouped) k-fold where k = N.

Leave-One-Group-Out (LOGO) / Leave-One-Site-Out Cross-Validation

Experimental Protocol:

- Partition: Identify the grouping factor G (e.g., MRI scanner site, clinical center, study cohort). Create one fold for each unique group.

- Iterate: For each group g: a. Designate all data from group g as the test set. b. Use all data from all other groups as the training set. c. Train and evaluate the model.

- Aggregate: Average performance across all held-out groups.

Use Case: Critical for multi-site neuroimaging studies. This protocol tests a model's ability to generalize to completely unseen data collection sites, addressing scanner variability, protocol differences, and population heterogeneity—a key requirement for clinically viable biomarkers.
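The four protocols in this section map onto scikit-learn splitters roughly as sketched below. The metadata are hypothetical (12 subjects, 3 scans each, 3 sites, one diagnosis per subject), and the feature matrix is a placeholder because the splitters only need its length. The two k-fold variants are shown at the scan level and therefore ignore subject boundaries.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneGroupOut, StratifiedKFold

# Hypothetical metadata, one entry per scan.
subject = np.repeat(np.arange(12), 3)            # 12 subjects x 3 scans
site = np.repeat(np.array(["A", "B", "C"]), 12)  # 4 subjects per site
y = np.repeat(np.tile([0, 0, 1], 4), 3)          # imbalanced: 1 patient per 2 controls
X = np.zeros((subject.size, 1))                  # placeholder features

protocols = {
    "k-fold": (KFold(n_splits=4, shuffle=True, random_state=0), None),
    "stratified k-fold": (StratifiedKFold(n_splits=4, shuffle=True, random_state=0), None),
    "leave-one-subject-out": (LeaveOneGroupOut(), subject),
    "leave-one-site-out": (LeaveOneGroupOut(), site),
}

n_splits = {}
patient_rate = {}
for name, (cv, groups) in protocols.items():
    splits = list(cv.split(X, y, groups))
    n_splits[name] = len(splits)
    # Fraction of patient scans in each test fold.
    patient_rate[name] = [float(y[test].mean()) for _, test in splits]
    print(f"{name:22s} folds={len(splits):2d}  test-fold patient rate={np.round(patient_rate[name], 2)}")
```

Stratification keeps the patient rate constant across folds, while the two group-based protocols trade that balance for independence at the subject or site level.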

Table 1: Quantitative & Qualitative Comparison of Key CV Protocols

| Protocol | Typical k Value | Test Set Size per Iteration | Key Advantage | Key Limitation | Ideal Neuroimaging Use Case |

|---|---|---|---|---|---|

| k-Fold | 5 or 10 | ~1/k of data | Low variance estimate; computationally efficient. | May produce optimistic bias in structured data. | Homogeneous, single-site data with N > 100. |

| Stratified k-Fold | 5 or 10 | ~1/k of data | Preserves class balance; reliable for imbalanced data. | Does not account for data clustering (e.g., within-subject). | Imbalanced diagnostic classification (e.g., AD vs HC). |

| Leave-One-Subject-Out (LOSO) | N (subjects) | 1 subject's data | Maximizes training data; unbiased for small N. | High computational cost; high variance estimate. | Small-N studies or task-fMRI with many trials per subject. |

| Leave-One-Site-Out | # of sites | All data from 1 site | True test of generalizability across sites/scanners. | Can have high variance if sites are few; large training-test distribution shift. | Multi-site clinical trials & consortium data (e.g., ADNI, ABIDE). |

Table 2: Impact of CV Choice on Reported Model Performance (Hypothetical Example)

| CV Protocol | Reported Accuracy (Mean ± Std) | Reported AUC | Interpretation in Context |

|---|---|---|---|

| 10-Fold (Single-Site) | 92.5% ± 2.1% | 0.96 | High performance likely inflated by site-specific noise. |

| Leave-One-Subject-Out (Multi-Site) | 78.3% ± 8.7% | 0.83 | More realistic estimate of performance on new subjects, although every site is still represented in the training data. |

| Leave-One-Site-Out | 74.1% ± 10.5% | 0.80 | Most rigorous estimate, directly assessing cross-site robustness. |

Workflow Diagram: Protocol Selection for Neuroimaging ML

Title: Decision Tree for Selecting Neuroimaging CV Protocols

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Libraries for Implementing CV Protocols

| Item / "Reagent" | Category | Function / Purpose | Example (Python) |

|---|---|---|---|

| Scikit-learn | Core Library | Provides ready-to-use implementations of KFold, StratifiedKFold, LeaveOneGroupOut, and GroupKFold. | from sklearn.model_selection import GroupKFold |

| Nilearn | Neuroimaging-specific | Tools for loading neuroimaging data and integrating with scikit-learn CV splitters. | from nilearn.connectome import ConnectivityMeasure |

| PyTorch / TensorFlow | Deep Learning Frameworks | For custom CV loops when training complex neural networks on image data. | Custom DataLoaders for site-specific splits. |

| Pandas / NumPy | Data Manipulation | Essential for managing subject metadata, site labels, and organizing folds. | Creating a groups array for LOGO. |

| Matplotlib / Seaborn | Visualization | Plotting CV fold schematics and result distributions (e.g., box plots per site). | Visualizing performance variance across leave-one-site-out folds. |

| COINSTAC | Decentralized Analysis Platform | Enables federated learning and cross-validation across distributed data without sharing raw images. | Privacy-preserving multi-site validation. |

Detailed Experimental Protocol: Leave-One-Site-Out for a Multi-Site fMRI Study

Aim: To develop a classifier for Major Depressive Disorder (MDD) that generalizes across different MRI scanners and recruitment sites.

Preprocessing:

- Acquire T1-weighted and resting-state fMRI data from 4 sites (S1, S2, S3, S4).

- Standardize preprocessing using a BIDS-app (e.g., fMRIPrep) to minimize pipeline differences.

- Extract features from fMRI data (e.g., functional connectivity matrices).

- Create a master DataFrame with columns: [Subject_ID, Features, Diagnosis, Site].

CV Implementation Script (Python Pseudocode):
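A minimal sketch of such a script is given below, assuming the master DataFrame described in the preprocessing steps. The DataFrame here is filled with synthetic values (30 hypothetical subjects per site, 45 features each), and the scaler plus logistic regression pipeline is a placeholder for whatever classifier the study uses.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import LeaveOneGroupOut
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the master DataFrame (real features would come from fMRI).
rng = np.random.default_rng(0)
rows = []
for site_name in ["S1", "S2", "S3", "S4"]:
    for i in range(30):
        dx = i % 2  # 0 = control, 1 = MDD
        rows.append({
            "Subject_ID": f"{site_name}_{i:03d}",
            "Features": rng.normal(size=45) + 0.5 * dx,
            "Diagnosis": dx,
            "Site": site_name,
        })
df = pd.DataFrame(rows)

X = np.vstack(df["Features"].tolist())
y = df["Diagnosis"].to_numpy()
sites = df["Site"].to_numpy()

# Scaling lives inside the pipeline, so it is fit on the training sites only.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

site_auc = {}
for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=sites):
    held_out_site = sites[test_idx][0]
    model.fit(X[train_idx], y[train_idx])
    prob = model.predict_proba(X[test_idx])[:, 1]
    site_auc[held_out_site] = roc_auc_score(y[test_idx], prob)

for name, auc in site_auc.items():
    print(f"held-out site {name}: AUC = {auc:.2f}")
print(f"mean AUC across sites: {np.mean(list(site_auc.values())):.2f}")
```

Reporting the per-site values alongside the mean makes it visible when a single site drives a drop in performance.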

Diagram: Leave-One-Site-Out Validation Workflow

Title: LOSO Workflow for Multi-Site Generalizability Test

Application Notes

In neuroimaging machine learning (ML) research, rigorous cross-validation (CV) is paramount to produce generalizable, clinically relevant models. Failure to correctly define the target of inference and ensure statistical independence between training and validation data leads to data leakage, producing grossly optimistic performance estimates that fail to translate to real-world applications. These concepts form the core of a robust validation thesis.

Data Leakage: The inadvertent sharing of information between the training and test datasets, violating the assumption of independence. In neuroimaging, this often occurs during pre-processing (e.g., site-scanner normalization using all data) or when splitting non-independent observations (e.g., multiple samples from the same subject across folds).

Independence: The fundamental requirement that the data used to train a model provides no information about the data used to test it. The unit of independence must align with the Target of Inference—the entity to which model predictions will generalize (e.g., new patients, new sessions, new sites).

Target of Inference: The independent unit on which predictions will be made in deployment. This dictates the appropriate level for data splitting. For a model intended to diagnose new patients, the patient is the unit of independence; for a model to predict cognitive state in new sessions from known patients, the session is the unit.

Experimental Protocols

Protocol 1: Nested Cross-Validation for Hyperparameter Tuning and Performance Estimation

Objective: To provide an unbiased estimate of model performance while tuning hyperparameters, with independence maintained according to the target.

- Define Cohort: Assemble neuroimaging dataset (e.g., fMRI, sMRI) with associated labels.

- Declare Target of Inference: Explicitly state the unit of generalization (e.g., "new subject").

- Outer Split: Partition the data at the level of the target unit (e.g., by Subject ID) into K outer folds.

- Iterate Outer Loop: For each of K iterations: a. Hold out one outer fold as the Test Set. b. The remaining K-1 folds constitute the Development Set.

- Inner Loop (on Development Set): a. Partition the Development Set into L inner folds, again respecting the target unit. b. Iteratively train on L-1 inner folds, validate on the held-out inner fold across a grid of hyperparameters. c. Select the hyperparameter set yielding the best average validation performance.

- Train Final Model: Train a new model on the entire Development Set using the selected optimal hyperparameters.

- Evaluate: Apply this model to the held-out Test Set from step 4a. Record performance metric (e.g., AUC, accuracy).

- Repeat: Iterate steps 4-7 until each outer fold has served as the test set once.

- Report: The mean and standard deviation of performance across all K outer test folds is the unbiased performance estimate.

Protocol 2: Preventing Leakage in Feature Pre-processing

Objective: To ensure normalization or feature derivation does not introduce information from the test set into the training pipeline.

- Split First: Perform the train-test or outer CV split based on the target unit before any data-driven pre-processing.

- Fit Transformers on Training Data Only: For operations like:

- Scanner/Site Effect Correction: (ComBat) Fit parameters (mean, variance) using only the training data.

- Voxel-wise Normalization (e.g., Z-scoring): Calculate mean and standard deviation per feature from only the training data.

- Principal Component Analysis (PCA): Derive component loadings from only the training data.

- Apply to Training & Test Data: Use the parameters/loadings from step 2 to transform both the training and the held-out test data.

- CV Iteration: In nested CV, this fit/apply process must be repeated freshly within each inner and outer loop to prevent leakage across folds.
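The fit-on-train, apply-to-both pattern can be sketched as follows for z-scoring and PCA. The data are random numbers and the split indices are assumed to come from a subject-level split made beforehand; ComBat is omitted because its API depends on the chosen package.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(loc=3.0, scale=2.0, size=(100, 20))

# Assumed to be the result of a split at the level of the target unit.
train_idx, test_idx = np.arange(80), np.arange(80, 100)

# Step 2: estimate means, SDs, and component loadings from training data only.
scaler = StandardScaler().fit(X[train_idx])
pca = PCA(n_components=5).fit(scaler.transform(X[train_idx]))

# Step 3: apply the frozen parameters to both partitions.
Z_train = pca.transform(scaler.transform(X[train_idx]))
Z_test = pca.transform(scaler.transform(X[test_idx]))

print("train shape:", Z_train.shape, " test shape:", Z_test.shape)
```

Placing the same transformers in a scikit-learn `Pipeline` and passing that pipeline to a CV routine repeats this fit/apply cycle automatically in every fold.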

Table 1: Impact of Data Leakage on Reported Model Performance (Simulated sMRI Classification Study)

| Splitting Protocol | Unit of Independence | Reported AUC (Mean ± SD) | Estimated Generalizes to |

|---|---|---|---|

| Random Voxel Splitting | Voxel | 0.99 ± 0.01 | Nowhere (Severe Leakage) |

| Scan Session Splitting | Session | 0.92 ± 0.04 | New Sessions |

| Subject Splitting (Correct) | Subject | 0.75 ± 0.07 | New Subjects |

| Site Splitting (Multi-site Study) | Site | 0.65 ± 0.10 | New Sites/Scanners |

Table 2: Recommended Splitting Strategy by Target of Inference

| Target of Inference | Example Research Goal | Appropriate Splitting Unit | Inappropriate Splitting Unit |

|---|---|---|---|

| New Subject | Diagnostic biomarker for a disease. | Subject ID | Scan Session, Voxel, Timepoint |

| New Session for Known Subject | Predicting treatment response from a baseline scan. | Scan Session / Timepoint | Voxel or Region of Interest (ROI) |

| New Site/Scanner | A classifier deployable across different hospitals. | Data Acquisition Site | Subject (if nested within site) |

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Neuroimaging ML Validation

| Item / Software | Function / Purpose |

|---|---|

| NiBabel / Nilearn | Python libraries for reading/writing neuroimaging data (NIfTI) and embedding ML pipelines with correct CV structures. |

| scikit-learn | Provides robust, standardized implementations of CV splitters (e.g., GroupKFold, LeaveOneGroupOut). |

| ComBat Harmonization | Algorithm for removing site/scanner effects. Must be applied within each CV fold to prevent leakage. |

| Nilearn maskers | Tools for feature extraction from brain regions; any data-driven extraction step must be performed post-split or fitted within each fold. |

| Hyperopt / Optuna | Frameworks for advanced hyperparameter optimization that can be integrated into nested CV loops. |

| Dummy Classifier | A simple baseline model (e.g., stratified, most frequent). Performance must be significantly better than this. |

| PREDICT-AI/ML-CVE | Emerging reporting guidelines and checklists specifically designed to prevent data leakage in ML studies. |

Neuroimaging data for machine learning presents unique challenges that violate standard assumptions in statistical learning. The features are inherently high-dimensional, spatially/temporally correlated, and observations are not independent and identically distributed (Non-IID). This necessitates specialized cross-validation (CV) protocols to avoid biased performance estimates and ensure generalizable models in clinical and drug development research.

Quantitative Characterization of Neuroimaging Data Properties

Table 1: Quantitative Profile of Typical Neuroimaging Dataset Challenges

| Data Property | Typical Scale/Range | Impact on ML | Common Metric |

|---|---|---|---|

| Feature-to-Sample Ratio (p/n) | $10^3$ to $10^6$ features : $10^1$ to $10^2$ samples | High risk of overfitting; requires strong regularization. | Dimensionality Curse Index |

| Spatial Autocorrelation (fMRI/MRI) | Moran’s I: 0.6 - 0.95 | Violates feature independence; inflates feature importance. | Moran’s I, Geary’s C |

| Temporal Autocorrelation (fMRI) | Lag-1 autocorrelation: 0.2 - 0.8 | Non-IID samples; reduces effective degrees of freedom. | Auto-correlation Function (ACF) |

| Site/Scanner Variance | Cohen’s d between sites: 0.3 - 1.2 | Introduces batch effects; creates non-IID structure. | ComBat-adjusted $\hat{\sigma}^2$ |

| Intra-Subject Correlation | ICC(3,1): 0.4 - 0.9 for within-subject repeats | Multiple scans per subject are Non-IID. | Intraclass Correlation Coefficient |

Application Notes: Cross-Validation Protocols for Non-IID Data

Nested Cross-Validation with Stratification

Purpose: To provide an unbiased estimate of model performance when tuning hyperparameters on correlated, high-dimensional data.

Protocol 3.1: Nested CV for Neuroimaging

- Outer Loop (Performance Estimation):

- Split data into K folds (e.g., K=5 or 10). Critical: Ensure all data from a single participant is contained within one fold (subject-level splitting), so that the non-IID structure of repeated measurements does not leak across folds.

- Inner Loop (Model Selection):

- For each outer training set, perform another CV loop.

- Use this loop to select optimal hyperparameters (e.g., regularization strength for an SVM or Lasso) via grid/random search.

- Model Training & Evaluation:

- Train the model with selected hyperparameters on the entire outer training set.

- Evaluate the trained model on the held-out outer test fold.

- Aggregation:

- Repeat for all outer folds. The mean performance across all outer test folds is the final unbiased estimate.

Leave-One-Site-Out Cross-Validation (LOSO-CV)

Purpose: To estimate model generalizability across unseen imaging sites or scanners, a critical step for multi-center trials.

Protocol 3.2: LOSO-CV

- Partitioning: For a dataset with data from S unique scanning sites, iteratively designate data from one site as the test set, and pool data from the remaining S-1 sites as the training set.

- Site-Level Confound Adjustment: Apply harmonization tools (e.g., ComBat, pyHarmonize) to the training set. Important: Fit the harmonization parameters only on the training set, then transform both training and test sets.

- Feature Selection: Perform voxel-wise or ROI-based feature selection (e.g., ANOVA) only on the harmonized training set. Apply the same mask to the test set.

- Training & Testing: Train the model on the harmonized training set and evaluate on the left-out site's data. Repeat for all sites.
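Why the feature selection step must see only the training sites can be shown on pure noise. In the sketch below the features carry no information about the labels, so any accuracy above chance is an artifact. The dataset sizes, the ANOVA-based `SelectKBest` selector, and the logistic regression classifier are assumptions for illustration; harmonization is left out to keep the example focused on selection.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneGroupOut, cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)
n_sites, per_site, n_features = 4, 20, 5000  # hypothetical sizes

X = rng.normal(size=(n_sites * per_site, n_features))  # pure noise features
y = np.tile([0, 1], n_sites * per_site // 2)            # balanced labels
sites = np.repeat(np.arange(n_sites), per_site)
cv = LeaveOneGroupOut()

# Leaky: features are selected once using every site, including future test sites.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky_acc = cross_val_score(
    LogisticRegression(max_iter=1000), X_leaky, y, groups=sites, cv=cv
).mean()

# Correct: selection is part of the pipeline and refit on the training sites per fold.
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
proper_acc = cross_val_score(pipe, X, y, groups=sites, cv=cv).mean()

print(f"selection on all data:    accuracy = {leaky_acc:.2f}")
print(f"selection inside each fold: accuracy = {proper_acc:.2f}")
```

Both runs use the same leave-one-site-out splitter, so the gap is attributable to where the selection was fitted.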

Repeated Hold-Group-Out for Longitudinal Data

Purpose: To validate predictive models on data from future timepoints, simulating a real-world prognostic task.

Protocol 3.3: Longitudinal Validation

- Temporal Sorting: Order participants or scans by time of acquisition (e.g., baseline, 6-month, 12-month).

- Training Set Definition: Use an early time segment (e.g., all baseline scans) for training.

- Test Set Definition: Use a later, mutually exclusive time segment (e.g., all 12-month scans from subjects not in the training set) for testing.

- Replication: Repeat the process, sliding the training window forward in time (e.g., train on 6-month, test on 24-month), to assess temporal decay of model performance.
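One possible way to build the training and test index sets for Protocol 3.3 is sketched below. The long-format layout (one row per scan, with hypothetical `subject` and `month` vectors) is an assumption made for illustration; only the index construction is shown, not model fitting.

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit

# Hypothetical long-format metadata: one row per scan.
n_subjects = 50
timepoints = np.array([0, 6, 12])            # months since baseline
subject = np.repeat(np.arange(n_subjects), len(timepoints))
month = np.tile(timepoints, n_subjects)

# Split subjects (not scans) so that training and test subjects are disjoint.
splitter = GroupShuffleSplit(n_splits=1, test_size=0.3, random_state=0)
placeholder_X = np.zeros(len(subject))
train_rows, test_rows = next(splitter.split(placeholder_X, groups=subject))

# Early segment for training, later segment for testing.
train_idx = train_rows[month[train_rows] == 0]    # baseline scans, training subjects
test_idx = test_rows[month[test_rows] == 12]      # 12-month scans, held-out subjects

print(len(train_idx), "training scans;", len(test_idx), "test scans")
```

Sliding the window forward, as in the replication step, amounts to changing the two month filters and repeating the split.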

Visualization of Protocols and Data Relationships

Diagram 1: Non-IID Neuroimaging ML Pipeline

Diagram 2: Leave-One-Site-Out CV Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Neuroimaging ML with Non-IID Data

| Tool Category | Specific Solution/Software | Primary Function | Key Consideration for Non-IID Data |

|---|---|---|---|

| Data Harmonization | ComBat (neuroCombat), pyHarmonize | Removes site/scanner effects while preserving biological signal. | Must be applied within CV loops to prevent data leakage. |

| Feature Reduction | PCA with ICA, Anatomical Atlas ROI summaries, Sparse Dictionary Learning | Reduces dimensionality and manages spatial correlation. | Stability selection across CV folds is crucial for reliability. |

| ML Framework with CV | scikit-learn, nilearn, NiMARE | Provides implemented CV splitters (e.g., GroupKFold, LeaveOneGroupOut). | Use custom splitters based on subject ID or site ID, not random splits. |

| Non-IID CV Splitters | GroupShuffleSplit, LeavePGroupsOut (in scikit-learn) | Ensures data from a single group (subject/site) is not split across train/test. | Foundational for any valid performance estimate. |

| Performance Metrics | Balanced Accuracy, Matthews Correlation Coefficient (MCC) | Robust metrics for imbalanced clinical datasets common in neuroimaging. | Always report with confidence intervals from outer CV folds. |

| Model Interpretability | SHAP, Permutation Feature Importance, Saliency Maps | Interprets model decisions in the presence of correlated features. | Permutation importance must be recalculated per fold; group-wise permutation recommended. |

Implementation in Practice: Step-by-Step Methodological Protocols and Code Considerations

This protocol is developed within the context of a comprehensive thesis on cross-validation (CV) methodologies for neuroimaging machine learning (ML). In neuroimaging-based prediction (e.g., of disease status, cognitive scores, or treatment response), unbiased performance estimation is paramount due to high-dimensional data, small sample sizes, and inherent risk of overfitting. Standard k-fold CV can lead to optimistically biased estimates due to "information leakage" from the model selection and hyperparameter tuning process. Nested cross-validation (NCV) is widely regarded as the gold standard for obtaining a nearly unbiased estimate of a model's true generalization error when a complete pipeline, including feature selection and hyperparameter optimization, must be evaluated.

Nested CV employs two levels of cross-validation: an outer loop for performance estimation and an inner loop for model selection.

Table 1: Comparison of Cross-Validation Schemes in Neuroimaging ML

| Scheme | Purpose | Bias Risk | Computational Cost | Recommended Use Case |

|---|---|---|---|---|

| Hold-Out | Preliminary testing | High (High variance) | Low | Very large datasets only |

| Simple k-Fold CV | Performance estimation | Moderate (Leakage if tuning is done on same folds) | Moderate | Final model evaluation only if no hyperparameter tuning is needed |

| Train/Validation/Test Split | Model selection & evaluation | Low if validation/test are truly independent | Low | Large datasets |

| Nested k x l-Fold CV | Unbiased performance estimation with tuning | Very Low | High (about k * l model fits per hyperparameter candidate) | Small-sample neuroimaging studies (Standard) |

Table 2: Typical Parameter Space for Hyperparameter Tuning (Inner Loop)

| Algorithm | Common Hyperparameters | Typical Search Method | Notes for Neuroimaging |

|---|---|---|---|

| SVM (Linear) | C (regularization) | Logarithmic grid (e.g., 2^[-5:5]) | Most common; sensitive to C |

| SVM (RBF) | C, Gamma | Random or grid search | Computationally intensive; risk of overfitting |

| Elastic Net / Lasso | Alpha (L1/L2 ratio), Lambda (penalty) | Coordinate descent over grid | Built-in feature selection |

| Random Forest | Number of trees, Max depth, Min samples split | Random search | Robust but less interpretable |

Experimental Protocol: Nested Cross-Validation for an fMRI Classification Study

This protocol details the steps for implementing nested CV to estimate the performance of a classifier predicting disease state (e.g., Alzheimer's vs. Control) from fMRI-derived features.

Protocol: Nested 5x5-Fold Cross-Validation

Objective: To obtain an unbiased estimate of classification accuracy, sensitivity, and specificity for a Support Vector Machine (SVM) classifier with hyperparameter tuning on voxel-based morphometry (VBM) data.

I. Preprocessing & Outer Loop Setup

- Data: N=100 participants (50 patients, 50 controls). Preprocessed VBM maps (features: ~100,000 voxels).

- Outer Loop (Performance Estimation): Partition the entire dataset (N=100) into 5 outer folds, stratified to preserve the class ratio. Each outer split uses 4 folds (n=80) for training and the remaining fold (n=20) for testing, so every participant is tested exactly once across the 5 splits.

II. Inner Loop Execution (Within a Single Outer Training Set) For each of the 5 outer training sets (n=80):

- The outer training set is designated as the temporary "whole dataset" for the inner loop.

- Split this temporary dataset (n=80) into 5 inner folds (Stratified).

- Hyperparameter Grid: Define C values = [2^-3, 2^-1, 2^1, 2^3, 2^5].

- For each candidate C value:

  a. Train an SVM on 4 inner folds (n=64) and validate on the held-out 1 inner fold (n=16). Repeat for all 5 inner folds (5-fold CV within the inner loop).

  b. Calculate the mean validation accuracy across the 5 inner folds for this C.

- Select the C value yielding the highest mean inner validation accuracy.

- Retrain an SVM with this optimal C on the entire outer training set (n=80). This is the final model for this outer split.

III. Outer Loop Evaluation

- Evaluate the retrained model from Step II.6 on the held-out outer test set (n=20), which has never been used for model selection or tuning.

- Record the performance metrics (accuracy, sensitivity, specificity) for this outer fold.

- Repeat Sections II & III for all 5 outer folds.

IV. Final Performance Estimation

- Aggregate the performance metrics from the 5 outer test folds.

- Report the mean and standard deviation (e.g., Accuracy: 78.0% ± 4.2%) as the unbiased estimate of generalization performance.

- Important: No single "final model" is produced by NCV. To deploy a model, retrain on the entire dataset using the hyperparameters selected via a final, simple k-fold CV on all data.
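The nested 5x5 protocol above might look roughly like this in scikit-learn. The sketch assumes synthetic data with 500 features standing in for the ~100,000-voxel VBM maps, and the random seeds are arbitrary; the C grid matches the one defined in Section II.

```python
import numpy as np
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in (assumption): 50 controls (0), 50 patients (1).
rng = np.random.default_rng(42)
y = np.repeat([0, 1], 50)
X = rng.normal(size=(100, 500))
X[:, :20] += 0.6 * y[:, None]

C_grid = [2.0**p for p in (-3, -1, 1, 3, 5)]
pipeline = make_pipeline(StandardScaler(), SVC(kernel="linear"))
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
inner_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)

rows = []
for train_idx, test_idx in outer_cv.split(X, y):
    # Section II: inner 5-fold search over C on the outer training set (n=80),
    # followed by an automatic refit with the best C on all 80 samples.
    search = GridSearchCV(pipeline, {"svc__C": C_grid}, cv=inner_cv, scoring="accuracy")
    search.fit(X[train_idx], y[train_idx])
    # Section III: evaluate once on the untouched outer test fold (n=20).
    y_pred = search.predict(X[test_idx])
    tn, fp, fn, tp = confusion_matrix(y[test_idx], y_pred, labels=[0, 1]).ravel()
    rows.append({
        "C": search.best_params_["svc__C"],
        "accuracy": (tp + tn) / len(test_idx),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    })

# Section IV: aggregate across the 5 outer folds.
for metric in ("accuracy", "sensitivity", "specificity"):
    values = np.array([r[metric] for r in rows])
    print(f"{metric}: {values.mean():.3f} +/- {values.std():.3f}")
```

The selected C can differ between outer folds; that variation is expected and is part of what the outer estimate accounts for.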

Workflow Diagram

Diagram Title: Nested 5x5-Fold Cross-Validation Workflow

The Scientist's Toolkit: Essential Research Reagents & Software

Table 3: Key Research Reagent Solutions for NCV in Neuroimaging ML

| Item / Resource | Function / Purpose | Example/Note |

|---|---|---|

| Scikit-learn (sklearn) | Primary Python library for implementing NCV (GridSearchCV, cross_val_score), ML models, and metrics. | Use sklearn.model_selection for StratifiedKFold, GridSearchCV. |

| NiBabel / Nilearn | Python libraries for loading, manipulating, and analyzing neuroimaging data (NIfTI files). | Nilearn integrates with scikit-learn for brain-specific decoding. |

| Stratified k-Fold Splitters | Ensures class distribution is preserved in each train/test fold, critical for imbalanced clinical datasets. | StratifiedKFold in scikit-learn. |

| High-Performance Computing (HPC) Cluster | NCV is computationally expensive (k*l model fits). Parallelization on HPC or cloud computing is often essential. | Distribute outer or inner loops across CPUs. |

| Hyperparameter Optimization Libraries | Advanced alternatives to exhaustive grid search for higher-dimensional parameter spaces. | Optuna, scikit-optimize, Ray Tune. |

| Metric Definition | Clear definition of performance metrics relevant to the clinical/scientific question. | Accuracy, Balanced Accuracy, ROC-AUC, Sensitivity, Specificity. |

| Random State Seed | A fixed random seed ensures the reproducibility of data splits and stochastic algorithms. | Critical for replicating results. Set random_state parameter. |

Advanced Considerations & Protocol Variations

Leave-One-Out Outer Loop (LOO-NCV)

For extremely small samples (N < 50), use LOO for the outer loop.

- Protocol: Each sample serves as the outer test set once. The model is trained on N-1 samples, with hyperparameters tuned via k-fold CV on those N-1 samples. This yields nearly unbiased but high-variance estimates.

- Diagram:

Diagram Title: Leave-One-Out Nested Cross-Validation (LOO-NCV)

Incorporating Feature Selection

Feature selection (e.g., ANOVA F-test, recursive feature elimination) must be included within the inner loop to prevent leakage.

- Protocol Modification: Within each inner CV fold, perform feature selection only on the inner training split, then transform both the inner training and validation splits. The selected feature set can vary across inner folds and outer splits.
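To illustrate why the selection step has to sit inside the CV folds, the following sketch compares a leaky workflow (selection on all data before CV) with a pipeline-based workflow on pure noise. The data, the choice of `SelectKBest` with k=20, and the sample sizes are all assumptions for the demonstration; since there is no real signal, any accuracy well above chance reflects leakage.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Pure-noise data (assumption): labels are unrelated to the features.
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 5000))
y = np.repeat([0, 1], 30)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Leaky: the selector sees the labels of every sample, including future test samples.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky_scores = cross_val_score(SVC(kernel="linear"), X_leaky, y, cv=cv)

# Leakage-free: the selector is refitted on the training part of each fold.
honest_model = make_pipeline(SelectKBest(f_classif, k=20), SVC(kernel="linear"))
honest_scores = cross_val_score(honest_model, X, y, cv=cv)

print(f"selection before CV: {leaky_scores.mean():.2f}")
print(f"selection inside CV: {honest_scores.mean():.2f}")
```

Wrapping the selector in a `Pipeline` also means that an inner `GridSearchCV` will refit it per inner fold automatically, which covers the protocol modification described above.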

Implementing nested cross-validation is a computationally intensive but non-negotiable practice for rigorous neuroimaging machine learning. It provides a robust defense against optimistic bias, ensuring that reported performance metrics reflect the true generalizability of the analytic pipeline to unseen data. Adherence to this protocol, including careful separation of tuning and testing phases, will yield more reliable, reproducible, and clinically interpretable predictive models.

Within neuroimaging machine learning research, the aggregation of data across multiple sites is essential for increasing statistical power and generalizability. However, this introduces technical and biological heterogeneity, known as batch effects or site effects, which can confound analysis and lead to spurious results. This document details the application of two critical methodologies: Cross-Validation Across Sites (CVAS), a robust evaluation scheme, and ComBat, a harmonization tool for site-effect removal. These protocols are framed as essential components of a rigorous cross-validation thesis, ensuring models generalize to unseen populations and sites.

Core Concepts & Definitions

Site Effect / Batch Effect: Non-biological variance introduced by differences in scanner manufacturer, model, acquisition protocols, calibration, and patient populations across data collection sites.

Harmonization: The process of removing technical site effects while preserving biological signals of interest.

Cross-Validation Across Sites (CVAS): A validation strategy where data from one or more entire sites are held out as the test set, ensuring a strict evaluation of a model's ability to generalize to completely unseen data sources.

ComBat: An empirical Bayes method for removing batch effects, initially developed for genomics and now widely adapted for neuroimaging features (e.g., cortical thickness, fMRI metrics).

Experimental Protocols

Protocol for Cross-Validation Across Sites (CVAS)

Objective: To assess the generalizability of a machine learning model to entirely new scanning sites.

Workflow:

- Data Partitioning: Group all samples by their site of origin. Let S = {S1, S2, ..., Sk} represent k unique sites.

- Iterative Hold-Out: For i = 1 to k:

  a. Test Set: Assign all data from site Si as the test set.

  b. Training/Validation Set: Pool data from all remaining sites S \ {Si}.

  c. Internal Validation: Within the pooled training data, perform a nested cross-validation (e.g., 5-fold) for model hyperparameter tuning. Critically, this internal cross-validation must also be performed across sites within the training pool to avoid leakage.

  d. Model Training: Train the final model with optimized hyperparameters on the entire pooled training set.

  e. Testing: Evaluate the trained model on the held-out site Si. Record performance metrics (e.g., accuracy, AUC, MAE).

- Aggregate Performance: Calculate the mean and standard deviation of the performance metrics across all k test folds (sites). This represents the model's site-independent performance.
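The CVAS workflow, including the site-wise inner loop from step c, could be sketched as follows. The data are synthetic, and the `site` vector, the logistic-regression model, and the C grid are illustrative assumptions rather than part of the protocol.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, LeaveOneGroupOut
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data (assumption): 5 sites x 24 subjects, both classes at every site.
rng = np.random.default_rng(1)
n_sites, n_per_site, n_features = 5, 24, 40
site = np.repeat(np.arange(n_sites), n_per_site)
y = np.tile([0, 1], n_sites * n_per_site // 2)
X = rng.normal(size=(len(y), n_features)) + 0.5 * site[:, None]
X[:, :8] += 0.7 * y[:, None]

pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
param_grid = {"logisticregression__C": [0.01, 0.1, 1.0]}

site_auc = {}
for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=site):
    # Step c: the inner loop also holds out whole sites within the training pool.
    search = GridSearchCV(pipeline, param_grid, cv=LeaveOneGroupOut(), scoring="roc_auc")
    search.fit(X[train_idx], y[train_idx], groups=site[train_idx])
    # Steps d-e: the refitted best model is evaluated on the unseen site.
    scores = search.decision_function(X[test_idx])
    site_auc[int(site[test_idx][0])] = roc_auc_score(y[test_idx], scores)

auc_values = np.array(list(site_auc.values()))
print(f"CVAS AUC: {auc_values.mean():.3f} +/- {auc_values.std():.3f}")
```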

Protocol for ComBat Harmonization

Objective: To adjust site effects in feature data prior to model development.

Workflow:

- Feature Extraction: Extract neuroimaging features (e.g., ROI volumes, fMRI connectivity matrices) for all subjects across all sites.

- Input Matrix Preparation: Create a feature matrix X (subjects x features). Define:

  - Batch: A categorical vector indicating the site/scanner for each subject.

  - Covariates: A matrix of biological/phenotypic variables of interest to preserve (e.g., age, diagnosis, sex).

- Model Selection:

  - Standard ComBat: Assumes a linear model of the form feature = mean + site_effect + error. It estimates and removes additive (shift) and multiplicative (scale) site effects.

  - ComBat with Covariates (ComBat-C): Extends the model to feature = mean + covariates + site_effect + error. This protects biological signals associated with the specified covariates during harmonization.

- Estimation & Adjustment: The empirical Bayes procedure:

  a. Standardizes each feature (mean-centering and scaling) so that all features are on a comparable scale.

  b. Estimates prior distributions for the site effect parameters from all features.

  c. Shrinks the site-effect parameter estimates for each feature toward the common prior, improving stability for small sample sizes.

  d. Applies the adjusted parameters to the standardized data, removing the site effects.

- Output: A harmonized feature matrix X_harmonized where site effects are minimized, and biological variance is retained.
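The fit-then-apply pattern behind the workflow above can be illustrated with a deliberately simplified location-scale adjustment. Note that this is not ComBat: it has no empirical Bayes shrinkage and no covariate preservation, and the function names `fit_location_scale` and `apply_location_scale` are hypothetical. It only shows how additive and multiplicative site parameters can be estimated on one dataset and applied to another.

```python
import numpy as np

def fit_location_scale(X_train, site_train):
    """Estimate pooled and per-site shift/scale for every feature (training data only)."""
    grand_mean = X_train.mean(axis=0)
    pooled_sd = X_train.std(axis=0, ddof=1)
    site_params = {}
    for s in np.unique(site_train):
        Z = (X_train[site_train == s] - grand_mean) / pooled_sd
        site_params[s] = (Z.mean(axis=0), Z.std(axis=0, ddof=1))  # additive, multiplicative
    return grand_mean, pooled_sd, site_params

def apply_location_scale(X, site, grand_mean, pooled_sd, site_params):
    """Remove the fitted site shift/scale; rows from unknown sites are left unchanged."""
    X_adj = np.array(X, dtype=float)  # copy so the input is not modified
    for s, (shift, scale) in site_params.items():
        rows = site == s
        Z = (X[rows] - grand_mean) / pooled_sd
        X_adj[rows] = (Z - shift) / scale * pooled_sd + grand_mean
    return X_adj

# Synthetic example (assumption): three sites with different additive offsets.
rng = np.random.default_rng(0)
site = np.repeat(np.array(["A", "B", "C"]), 40)
offsets = {"A": 0.0, "B": 1.5, "C": -1.0}
X = rng.normal(size=(120, 5)) + np.array([offsets[s] for s in site])[:, None]

params = fit_location_scale(X, site)
X_harmonized = apply_location_scale(X, site, *params)
print(np.round([X_harmonized[site == s].mean() for s in "ABC"], 3))
```

Within a CV loop, `fit_location_scale` would be called on the training rows only. A limitation of this sketch is that a site absent from the training data has no fitted parameters and passes through unadjusted, which matters for leave-one-site-out designs.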

Data & Comparative Analysis

Table 1: Performance Comparison of Validation Strategies (Simulated Classification Task)

| Validation Scheme | Mean Accuracy (%) | Accuracy SD (%) | AUC | Notes |

|---|---|---|---|---|

| Random 10-Fold CV | 92.5 | 2.1 | 0.96 | Overly optimistic; data leakage across sites. |

| CVAS | 74.3 | 8.7 | 0.81 | Realistic estimate of performance on new sites. |

| CVAS on ComBat-Harmonized Data | 78.9 | 7.2 | 0.85 | Harmonization improves generalizability and reduces variance across sites. |

Table 2: Impact of ComBat Harmonization on Feature Variance (Example Dataset)

| Feature (ROI Volume) | Variance Before Harmonization (a.u.) | Variance After ComBat (a.u.) | % Variance Reduction (Site-Related) |

|---|---|---|---|

| Right Hippocampus | 15.4 | 10.1 | 34.4% |

| Left Amygdala | 9.8 | 7.3 | 25.5% |

| Total Gray Matter | 45.2 | 42.5 | 6.0% |

| Mean Across All Features | 22.7 | 16.4 | 27.8% |

Visualization of Workflows

Title: CVAS Workflow for Robust Site-Generalizable Evaluation

Title: ComBat Harmonization Protocol Steps

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Tools for Multi-Site Neuroimaging Analysis

| Item/Category | Example/Tool Name | Function & Rationale |

|---|---|---|

| Harmonization Software | neuroComBat (Python), ComBat (R) | Implements the empirical Bayes harmonization algorithm for neuroimaging features. |

| Machine Learning Library | scikit-learn, nilearn | Provides standardized implementations of classifiers, regressors, and CV splitters. |

| Site-Aware CV Splitters | GroupShuffleSplit, LeaveOneGroupOut (scikit-learn) | Enforces correct data splitting by site group to prevent leakage during CVAS. |

| Feature Extraction Suite | FreeSurfer, FSL, SPM, Nipype | Generates quantitative features (volumes, thickness, connectivity) from raw images. |

| Data Standard Format | Brain Imaging Data Structure (BIDS) | Organizes multi-site data consistently, simplifying pipeline integration. |

| Statistical Platform | R (with lme4, sva packages) | Used for advanced statistical modeling and validation of harmonization effectiveness. |

| Cloud Computing/Container | Docker, Singularity, Cloud HPC (AWS, GCP) | Ensures computational reproducibility and scalability across research teams. |

Within neuroimaging machine learning research, validating predictive models on temporal or longitudinal data presents unique challenges. Standard cross-validation (CV) violates the temporal order and inherent autocorrelation of such data, leading to over-optimistic performance estimates and non-generalizable models. This document outlines critical cross-validation strategies tailored for time-series and repeated measures data, providing application notes and detailed experimental protocols for implementation in neuroimaging contexts relevant to clinical research and drug development.

The following table summarizes the primary CV strategies, their applications, and key advantages/disadvantages.

Table 1: Comparison of Temporal Cross-Validation Strategies

| Strategy | Description | Appropriate Use Case | Key Advantage | Key Disadvantage |

|---|---|---|---|---|

| Naive Random Split | Random assignment of all timepoints to folds. | Not recommended for temporal data. Benchmark only. | Maximizes data use. | Severe data leakage; over-optimistic estimates. |

| Single-Subject Time-Series CV | For within-subject modeling (e.g., brain-state prediction). | Single-subject neuroimaging time-series (e.g., fMRI, EEG). | Preserves temporal structure for the individual. | Cannot generalize findings to new subjects. |

| Leave-One-Time-Series-Out | Entire time-series of one subject (or block) is held out as test set. | Multi-subject studies with independent temporal blocks/subjects. | No leakage between independent series; realistic for new subjects. | High variance if subject/block count is low. |

| Nested Rolling-Origin CV | Outer loop: final test on latest data. Inner loop: time-series CV on training period for hyperparameter tuning. | Forecasting future states (e.g., disease progression). | Most realistic for clinical forecasting; unbiased hyperparameter tuning. | Computationally intensive; requires substantial data. |

| Grouped (Cluster) CV | Ensures all data from a single subject or experimental session are in the same fold. | Longitudinal repeated measures (e.g., pre/post treatment scans from same patients). | Prevents leakage of within-subject correlations across folds. | Requires careful definition of groups (e.g., subject ID). |

Detailed Experimental Protocols

Protocol 3.1: Implementation of Nested Rolling-Origin Cross-Validation for Prognostic Neuroimaging Biomarkers

Objective: To train and validate a machine learning model that forecasts clinical progression (e.g., cognitive decline) from longitudinal MRI scans.

Materials: Longitudinal neuroimaging dataset with aligned clinical scores for each timepoint, computational environment (Python/R), ML libraries (scikit-learn, nilearn).

Procedure:

- Data Preparation: Align all subject scans to a common template. Extract features (e.g., regional volumetry, connectivity matrices) for each subject at each timepoint (T1, T2...Tn). Arrange data in chronological order globally.

- Define Cutoffs: Set an initial training window size (e.g., data from T1 to Tk) and a testing window (e.g., Tk+1). Define the forecast horizon (e.g., one timepoint ahead).

- Outer Loop (Performance Evaluation):

  a. For i in range(k, total_timepoints - horizon):

  b. Test Set: Assign data at time i+horizon as the held-out test set.

  c. Potential Training Pool: All data from timepoints ≤ i.

- Inner Loop (Hyperparameter Tuning on Training Pool):

  a. On the training pool, perform a time-series CV (e.g., expanding window) without accessing the future data from the outer loop test set.

  b. For each inner fold, train the model on an expanding history, validate on the subsequent timepoint(s), and evaluate performance.

  c. Select the hyperparameters that yield the best average validation score across inner folds.

- Final Model Training & Testing:

  a. Train a final model on the entire current training pool (timepoints ≤ i) using the optimized hyperparameters.

  b. Evaluate this model on the held-out outer test set (time i+horizon). Store the performance metric.

- Iteration: Increment i, effectively rolling the origin forward, and repeat steps 3-5.

- Reporting: The final model performance is the average of all scores from the held-out outer test sets. Report mean ± SD of the performance metric (e.g., MAE, RMSE, R²).
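A compact sketch of the rolling-origin procedure follows, assuming synthetic data in which every row carries a hypothetical integer `time` label (0-indexed timepoints) and a ridge regression whose penalty `alpha` is the only tuned hyperparameter. The helper `expanding_window_mae` is introduced here for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error

# Synthetic longitudinal data (assumption): 8 timepoints x 30 observations each.
rng = np.random.default_rng(0)
n_timepoints, n_per_timepoint, n_features = 8, 30, 10
time = np.repeat(np.arange(n_timepoints), n_per_timepoint)
X = rng.normal(size=(len(time), n_features))
y = X[:, 0] + 0.1 * time + rng.normal(scale=0.5, size=len(time))

alphas = [0.1, 1.0, 10.0]
k, horizon = 3, 1  # initial training window covers timepoints 0..k

def expanding_window_mae(alpha, last_train_time):
    """Inner loop: expanding-window validation using only timepoints <= last_train_time."""
    errors = []
    for j in range(last_train_time - horizon + 1):
        train, val = time <= j, time == j + horizon
        model = Ridge(alpha=alpha).fit(X[train], y[train])
        errors.append(mean_absolute_error(y[val], model.predict(X[val])))
    return np.mean(errors)

outer_mae = []
for i in range(k, n_timepoints - horizon):
    # Tune on the training pool only; the outer test timepoint is never touched.
    best_alpha = min(alphas, key=lambda a: expanding_window_mae(a, i))
    train, test = time <= i, time == i + horizon
    final_model = Ridge(alpha=best_alpha).fit(X[train], y[train])
    outer_mae.append(mean_absolute_error(y[test], final_model.predict(X[test])))

print(f"MAE: {np.mean(outer_mae):.3f} +/- {np.std(outer_mae):.3f}")
```

If the same subjects contribute rows at several timepoints, the within-subject dependence between training and test rows remains; whether that is acceptable depends on whether the goal is forecasting for known or for new subjects.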

Protocol 3.2: Grouped Cross-Validation for Treatment Response Analysis

Objective: To assess the generalizability of a classifier predicting treatment responder status from baseline and follow-up scans, avoiding within-subject data leakage.

Materials: Multimodal neuroimaging data (e.g., pre- and post-treatment fMRI) with subject IDs, treatment response labels.

Procedure:

- Feature Engineering: Calculate delta features (post-treatment minus baseline) for each imaging metric per subject. Alternatively, use both timepoints as separate samples but with a shared subject identifier.

- Define Groups: Assign a unique group identifier for each subject (or for each longitudinal cluster like family or site).

- Stratification: Keep the distribution of the target variable (e.g., responder/non-responder) as balanced as possible across folds while keeping each group intact within a single fold (e.g., with StratifiedGroupKFold).

- CV Split: Use a GroupKFold or LeaveOneGroupOut iterator. For LeaveOneGroupOut:

  a. For each unique subject/group ID:

  b. Test Set: All samples (both timepoints) from that subject.

  c. Training Set: All samples from all other subjects.

  d. Train the model on the training set and evaluate on the held-out subject's data.

- Aggregation: Aggregate predictions across all left-out subjects. Calculate overall accuracy, sensitivity, specificity, and AUC-ROC. Report confusion matrix and AUC with 95% CI.
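The leave-one-subject-out variant of this procedure, with pooled predictions for the aggregation step, might be sketched as below. The data are synthetic, with two scans per subject and a shared subject offset to mimic within-subject correlation; the `subject` and `responder` names are assumptions. The 95% CI is not computed here.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, roc_auc_score
from sklearn.model_selection import LeaveOneGroupOut, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic repeated-measures data (assumption): 30 subjects, pre and post scans.
rng = np.random.default_rng(0)
n_subjects, n_features = 30, 20
subject = np.repeat(np.arange(n_subjects), 2)
responder = np.repeat(np.arange(n_subjects) % 2, 2)
X = rng.normal(size=(len(subject), n_features)) + 0.7 * responder[:, None]
X += np.repeat(rng.normal(size=(n_subjects, n_features)), 2, axis=0)  # subject effect

model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Each split holds out both scans of one subject; predictions are pooled afterwards.
proba = cross_val_predict(
    model, X, responder, groups=subject, cv=LeaveOneGroupOut(), method="predict_proba"
)[:, 1]

auc = roc_auc_score(responder, proba)
cm = confusion_matrix(responder, (proba >= 0.5).astype(int), labels=[0, 1])
print(f"pooled AUC = {auc:.3f}")
print(cm)
```

Pooling predictions before computing the AUC is necessary here because a single held-out subject contains only one class, so a per-fold AUC is undefined.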

Visualization of Methodologies

Diagram 1: Nested Rolling-Origin Cross-Validation Workflow

Diagram 2: Grouped (Leave-One-Subject-Out) CV for Repeated Measures

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Computational Tools & Libraries

| Item/Category | Specific Solution (Example) | Function in Temporal CV Research |

|---|---|---|

| Programming Environment | Python (scikit-learn, pandas, numpy) / R (caret, tidymodels) | Core platform for data manipulation, model implementation, and custom CV splitting. |

| Time-Series CV Iterators | sklearn.model_selection.TimeSeriesSplit, sklearn.model_selection.GroupKFold, sklearn.model_selection.LeaveOneGroupOut | Provides critical objects for generating temporally valid train/test indices. |

| Specialized Neuroimaging ML | Nilearn (Python), PRONTO (MATLAB) | Offers wrappers for brain data I/O, feature extraction, and CV compatible with 4D neuroimaging data. |

| Hyperparameter Optimization | sklearn.model_selection.GridSearchCV / RandomizedSearchCV (used in inner loops) | Automates the search for optimal model parameters within the constraints of temporal CV. |

| Performance Metrics | Mean Absolute Error (MAE), Root Mean Squared Error (RMSE) for regression; AUC-ROC for classification. | Quantifies forecast error or discriminative power on held-out temporal data. |

| Data Visualization | Matplotlib, Seaborn, Graphviz | Creates performance trend plots, results diagrams, and workflow visualizations. |

Within a thesis on cross-validation (CV) protocols for neuroimaging machine learning (ML), data splitting is the foundational step that dictates the validity of all subsequent results. The high dimensionality, small sample size (n<

Table 1: Modality Characteristics and Splitting Implications

| Modality | Typical Data Structure | Key Splitting Challenges | Primary Leakage Risks |

|---|---|---|---|

| Morphometric | Voxel-based morphometry (VBM), cortical thickness maps, region-of-interest (ROI) volumes. Single scalar value per feature per subject. | Inter-subject anatomical similarity (e.g., twins, families). Site/scanner effects in multi-center studies. | Splitting related subjects across folds. Not accounting for site effects. |

| Functional (Task/RS-fMRI) | 4D time-series (x,y,z,time). Features are connectivity matrices, ICA components, or time-series summaries. | Temporal autocorrelation within runs. Multiple runs or sessions per subject. Task-block structure. | Splitting timepoints from the same run/session across train and test sets. |

| Diffusion (dMRI) | Derived scalar maps (FA, MD), tractography streamline counts, connectome matrices. | Multi-shell, multi-direction acquisition. Tractography is computationally intensive. Connectomes are inherently sparse. | Leakage in connectome edge weights if tractography is performed on pooled data before splitting. |

Table 2: Recommended Splitting Strategies by Modality

| Splitting Method | Best Suited For | Protocol Section | Key Rationale |

|---|---|---|---|

| Group K-Fold (Stratified) | Morphometric, Single-session fMRI features, dMRI scalars. | 3.1 | Standard approach for independent samples. Stratification preserves class balance. |

| Leave-One-Site-Out | Multi-center studies of any modality. | 3.2 | Provides robust estimate of generalizability across unseen scanners/cohorts. |

| Leave-One-Subject-Out (LOSO) for Repeated Measures | Multi-session or multi-run fMRI. | 3.3 | Ensures all data from one subject is exclusively in test set, preventing within-subject leakage. |

| Nested Temporal Splitting | Longitudinal study designs. | 3.4 | Uses earlier timepoints for training, later for testing, simulating real-world prediction. |

Experimental Protocols

Protocol 3.1: Group K-Fold for Morphometric Data

Application: Cortical thickness analysis in Alzheimer’s Disease (AD) vs. Healthy Control (HC) classification.

- Data Preparation: Process T1-weighted images through a pipeline (e.g., FreeSurfer, CAT12). Extract features (e.g., mean thickness for 68 Desikan-Killiany parcels).

- Subject List: Create a list of unique subject IDs (N=300: 150 AD, 150 HC).

- Stratification: Generate a label vector corresponding to diagnosis.

- Split Generation: Use StratifiedGroupKFold (scikit-learn) with n_splits=5 or 10. Provide subject ID as the groups argument. This ensures:

  - No subject appears in more than one test fold.

  - The relative class proportions are preserved in each fold as closely as the grouping allows.

- Iteration: For each fold i:

  - Hold out Fold i as the test set.

  - Remaining K-1 folds constitute the training set. Further split this for internal validation/hyperparameter tuning using a nested CV loop.

- Validation: Report mean ± standard deviation of performance metrics (e.g., accuracy, AUC) across all K outer test folds.

Protocol 3.2: Leave-One-Site-Out (LOSO) for Multi-Center dMRI Data

Application: Predicting disease status from Fractional Anisotropy (FA) maps across 4 scanners.

- Data Preparation: Perform voxelwise analysis of dMRI data (e.g., using FSL's TBSS). Align all FA images to a common skeleton.

- Site Tagging: Append a site label (Site_A, Site_B, Site_C, Site_D) to each subject's metadata.

- Split Definition: The number of splits equals the number of unique sites.

- Iteration: For each site S:

  - Test Set: All subjects (n=25) from site S.

  - Training Set: All subjects (n=75) from the remaining three sites.

- Model Training & Evaluation: Train the model on the three-site pool. Evaluate on the held-out site S. This tests scanner invariance.

- Aggregation: Collate results from all 4 test sets.

Protocol 3.3: Leave-One-Subject-Out (LOSO) for Task-fMRI

Application: Decoding stimulus category from multi-run task-fMRI data.

- Feature Extraction: For each subject (N=50), preprocess each run separately. Extract trial-averaged activation patterns (beta maps) for each condition (e.g., faces, houses).

- Data Structure: Organize features as a list per subject, containing patterns from all their runs/trials.

- Split Definition: Number of splits = Number of subjects (N=50).

- Iteration: For subject i:

  - Test Set: All beta maps from all runs of subject i.

  - Training Set: All beta maps from all runs of the remaining 49 subjects.

- Critical Note: This is computationally intensive but is the gold standard for preventing leakage of within-subject temporal or run-specific correlations.

Protocol 3.4: Nested Temporal Splitting for Longitudinal Morphometry

Application: Predicting future clinical score from baseline and year-1 MRI.

- Data Alignment: For each subject, ensure scans are aligned to a common template and features are extracted consistently across timepoints (T0, T1, T2).

- Temporal Split: Designate T2 data as the ultimate external test set. Do not use it for any model development.

- Development Set (T0, T1): Perform a nested CV:

- Outer Loop (Time-based): Train on T0, validate on T1.

- Inner Loop: On the T0 training data, perform standard Group K-Fold to tune hyperparameters.

- Final Evaluation: The best model from the development phase is retrained on all T0+T1 data and evaluated once on the held-out T2 data.

Visualization of Core Splitting Workflows

Title: Decision Workflow for Neuroimaging Data Splitting Strategy

Title: Nested Leave-One-Site-Out Cross-Validation Protocol

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Toolkits for Implementing Splitting Protocols

| Tool/Reagent | Primary Function | Application in Splitting Protocols |

|---|---|---|

| scikit-learn (Python) | Comprehensive ML library. | Provides GroupKFold, StratifiedGroupKFold, LeaveOneGroupOut splitters. Core engine for implementing all custom CV loops. |

| nilearn (Python) | Neuroimaging-specific ML and analysis. | Handles brain data I/O, masking, and connects seamlessly with scikit-learn pipelines for neuroimaging data. |

| NiBabel (Python) | Read/write neuroimaging file formats. | Essential for loading image data (NIfTI) to extract features before splitting. |

| BIDS (Brain Imaging Data Structure) | File organization standard. | Provides consistent subject/session/run labeling, which is critical for defining correct grouping variables (e.g., subject_id, session). |

| fMRIPrep / QSIPrep | Automated preprocessing pipelines. | Generate standardized, quality-controlled data for morphometric, functional, and diffusion modalities, ensuring features are split-ready. |

| CUDA / GPU Acceleration | Parallel computing hardware/API. | Critical for tractography (DSI Studio, MRtrix3) and deep learning models used in conjunction with advanced splitting schemes. |

Application Notes and Protocols

Within the broader thesis on cross-validation (CV) protocols for neuroimaging machine learning research, the integration of specialized toolboxes is paramount for robust, reproducible analysis. This document details protocols for integrating nilearn (Python), scikit-learn (sklearn, Python), and BRANT (MATLAB) to implement neuroimaging-specific CV pipelines, addressing challenges like spatial autocorrelation, confounds, and data size.

Foundational Cross-Validation Protocols for Neuroimaging

Neuroimaging data violates the independent and identically distributed (i.i.d.) assumption of standard CV due to spatial correlation and repeated measures from the same subject. The following protocols are critical:

- Subject-Level (Leave-Subject-Out) CV: The only strictly valid method for generalization to new populations when multiple samples (e.g., scans, trials) come from each subject. Training and test sets contain entirely different subjects.

- Nested CV: An outer loop estimates the model's generalization performance, while an inner loop performs hyperparameter tuning on the training fold. This prevents optimistic bias.

- Confound Regression: Physiological and motion confounds must be regressed from the data within each training fold to prevent data leakage.
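The fold-wise confound regression described in the last item can be sketched as follows. This is an illustration under assumptions: the data are synthetic, the two confound columns are hypothetical (e.g., a motion summary and age), and a plain linear model is used to estimate the confound contribution.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.model_selection import GroupKFold

# Synthetic data (assumption): one sample per subject, two confound variables.
rng = np.random.default_rng(0)
n_subjects, n_features = 60, 30
groups = np.arange(n_subjects)
y = groups % 2
confounds = rng.normal(size=(n_subjects, 2))
X = rng.normal(size=(n_subjects, n_features)) + confounds @ rng.normal(size=(2, n_features))
X[:, :5] += 0.8 * y[:, None]

scores = []
for train_idx, test_idx in GroupKFold(n_splits=5).split(X, y, groups):
    # Estimate the confound-to-feature mapping on the training fold only.
    deconfounder = LinearRegression().fit(confounds[train_idx], X[train_idx])
    # Remove the predicted confound contribution from both sets using that mapping.
    X_train = X[train_idx] - deconfounder.predict(confounds[train_idx])
    X_test = X[test_idx] - deconfounder.predict(confounds[test_idx])
    clf = LogisticRegression(max_iter=1000).fit(X_train, y[train_idx])
    scores.append(clf.score(X_test, y[test_idx]))

print(f"accuracy: {np.mean(scores):.3f} +/- {np.std(scores):.3f}")
```

Fitting the confound model on the full dataset before splitting would let test-set information shape the training features, which is the leakage this step is meant to avoid.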

Integrated Toolbox Implementation Protocols

Protocol A: Python-Centric Pipeline (nilearn & sklearn)

This protocol is suited for feature extraction from brain images followed by machine learning.

Experimental Workflow:

- Data Preparation: Use nilearn's NiftiMasker or MultiNiftiMasker to load and mask 4D fMRI or 3D sMRI data, applying confound regression and standardization within a CV-aware pattern using safe_mask strategies.

- Feature Engineering: Extract region-of-interest (ROI) timeseries means or connectomes using nilearn's connectome and regions modules.

- CV Scheme Definition: Use sklearn.model_selection.GroupShuffleSplit or LeavePGroupsOut with subject IDs as groups to enforce subject-level splits.

- Nested CV Pipeline: Construct a pipeline (sklearn.pipeline.Pipeline) integrating scaling, dimensionality reduction (e.g., PCA), and the estimator. Use GridSearchCV or RandomizedSearchCV for the inner loop.

- Evaluation: Run the nested CV on the outer loop, scoring using appropriate metrics (e.g., accuracy, ROC-AUC for classification, R² for regression).
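A minimal sketch of the nested, subject-grouped pipeline from this workflow is shown below. It assumes features have already been extracted into a NumPy array (the masking and connectome steps are omitted), uses synthetic data, and swaps in GroupKFold for both loops as one possible group-aware splitter. The variable names, grid values, and effect size are illustrative assumptions.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, GroupKFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
n_subjects, runs_per_subject, n_features = 20, 3, 50
groups = np.repeat(np.arange(n_subjects), runs_per_subject)  # subject ID per run
y = np.repeat(np.arange(n_subjects) % 2, runs_per_subject)   # one label per subject
X = rng.normal(size=(groups.size, n_features)) + 0.8 * y[:, None]  # synthetic signal

pipe = Pipeline([
    ("scale", StandardScaler()),
    ("pca", PCA()),
    ("clf", LogisticRegression(max_iter=1000)),
])
param_grid = {"pca__n_components": [2, 5], "clf__C": [0.1, 1.0]}

outer_scores = []
for train_idx, test_idx in GroupKFold(n_splits=5).split(X, y, groups):
    # Inner loop: tuning sees only the outer training subjects, split by subject
    search = GridSearchCV(pipe, param_grid, cv=GroupKFold(n_splits=3), scoring="accuracy")
    search.fit(X[train_idx], y[train_idx], groups=groups[train_idx])
    # Outer loop: the refit best model is scored on unseen subjects
    outer_scores.append(search.score(X[test_idx], y[test_idx]))

print(f"Nested CV accuracy: {np.mean(outer_scores):.2f} +/- {np.std(outer_scores):.2f}")
```

The outer loop is written by hand so that the subject IDs of each outer training set can be passed explicitly to the inner search; scaling and PCA sit inside the pipeline and are therefore refit on every training split.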

Diagram: Python-Centric Neuroimaging CV Workflow

Protocol B: MATLAB-Centric Pipeline with BRANT

This protocol leverages BRANT for preprocessing and statistical mapping, integrating with MATLAB's Statistics & Machine Learning Toolbox for CV.

Experimental Workflow:

- Batch Preprocessing: Use BRANT's GUI or batch script to perform standardized preprocessing (slice timing, realignment, normalization, smoothing) for the entire cohort.

- First-Level Analysis: Use BRANT to generate subject-level contrast maps (e.g., Beta maps for a condition). These maps become the input features for ML.

- Data Organization: Load all contrast maps into a matrix (Voxels x Subjects). Use subject ID vector for grouping.

- CV Scheme Definition: Use cvpartition with the 'Leaveout' or 'Kfold' option on subject indices to create splits.

- Manual Nested Loop: Program an outer loop over CV partitions. Within each training set, use crossval or another cvpartition for inner-loop tuning.

- Model Training & Testing: Train a linear model (e.g., fitclinear for classification) on the training set with selected hyperparameters, then test on the held-out subjects.

Diagram: MATLAB/BRANT Neuroimaging CV Pipeline

The Scientist's Toolkit: Key Research Reagent Solutions

| Tool/Solution | Primary Environment | Function in Neuroimaging CV |

|---|---|---|

| Nilearn | Python | Provides high-level functions for neuroimaging data I/O, masking, preprocessing, and connectome extraction. Seamlessly integrates with sklearn for building ML pipelines. |

| Scikit-learn (sklearn) | Python | Offers a unified interface for a vast array of machine learning models, preprocessing scalers, dimensionality reduction techniques, and crucially, cross-validation splitters (e.g., GroupKFold). |

| BRANT | MATLAB/SPM | A batch-processing toolbox for fMRI and VBM preprocessing and statistical analysis. Standardizes the creation of input features (e.g., statistical maps) for ML. |

| NiBabel | Python | The foundational low-level library for reading and writing neuroimaging data formats (NIfTI, etc.) in Python. Underpins nilearn's functionality. |

| SPM12 | MATLAB | A prerequisite for BRANT. Provides the core algorithms for image realignment, normalization, and statistical parametric mapping. |

| Statistics and Machine Learning Toolbox | MATLAB | Provides CV partitioning functions (cvpartition), model fitting functions (fitclinear, fitrlinear), and hyperparameter optimization routines. |

| NumPy/SciPy | Python | Essential for numerical operations and linear algebra required for custom metric calculation and data manipulation within CV loops. |

Table 1: Comparison of Integrated Toolbox Protocols for Neuroimaging CV

| Aspect | Python-Centric (Nilearn/sklearn) | MATLAB-Centric (BRANT) |

|---|---|---|

| Core Strengths | High integration, modularity, vast ML library, strong open-source community, easier version control. | Familiar environment for neuroimagers, tight integration with SPM, comprehensive GUI for preprocessing. |

| CV Implementation | Native, streamlined via sklearn.model_selection. Nested CV is straightforward. | Requires manual loop programming. CV logic must be explicitly coded around cvpartition. |

| Data Leakage Prevention | Built-in patterns (e.g., Pipeline with NiftiMasker) facilitate safe confound regression per fold. | Researcher must manually ensure all preprocessing steps (beyond BRANT) are applied within each CV fold. |

| Scalability | Excellent for large datasets and complex, non-linear models (e.g., SVMs, ensemble methods). | Can be slower for large-scale hyperparameter tuning and less flexible for advanced ML models. |

| Primary Use Case | End-to-end ML research pipelines, from raw images to final model, favoring modern Python ecosystems. | Leveraging existing SPM/BRANT preprocessing pipelines, integrating ML into traditional fMRI analysis workflows. |

| Barrier to Entry | Requires Python proficiency. Environment setup can be complex. | Lower for researchers already embedded in the MATLAB/SPM ecosystem. |

Debugging and Refining: Solving Common CV Pitfalls and Optimizing Model Robustness

Data leakage is a critical, often subtle, failure mode that invalidates cross-validation (CV) protocols in neuroimaging machine learning (ML). This document provides application notes and protocols for diagnosing and preventing leakage during feature selection and preprocessing, a core pillar of a robust neuroimaging ML thesis. Leakage artificially inflates performance estimates, leading to non-reproducible findings and failed translational efforts in clinical neuroscience and drug development.

Table 1: Prevalence and Performance Inflation of Common Leakage Types in Neuroimaging ML Studies

| Leakage Type | Estimated Prevalence in Literature* | Average Observed Inflation of Accuracy (AUC/%)* | Typical CV Protocol Where It Occurs |

|---|---|---|---|

| Preprocessing with Global Statistics | High (~35%) | 8-15% | Naive K-Fold, Leave-One-Subject-Out (LOSO) without nesting |

| Feature Selection on Full Dataset | Very High (~50%) | 15-25% | All common protocols if not nested |

| Temporal Leakage (fMRI/sEEG) | Moderate (~20%) | 10-20% | Standard K-Fold on serially correlated data |

| Site/Scanner Effect Leakage | High in multi-site studies (~40%) | 5-12% | Random splitting of multi-site data |

| Augmentation Leakage | Emerging Issue (~15%) | 3-10% | Applying augmentation before train-test split |

*Synthetic data based on review of methodological critiques from 2020-2024.

Table 2: Performance of Leakage-Prevention Protocols

| Prevention Protocol | Relative Computational Cost | Typical Reduction in Inflated Accuracy | Recommended Use Case |

|---|---|---|---|

| Nested (Double) Cross-Validation | High (2-5x) | Returns estimate to unbiased baseline | Final model evaluation, small-N studies |

| Strict Subject-Level Splitting | Low | Eliminates subject-specific leakage | All neuroimaging studies |

| Group-Based Splitting (e.g., by site) | Low-Moderate | Eliminates site/scanner leakage | Multi-center trials, consortium data |

| Blocked/Time-Series Aware CV | Moderate | Mitigates temporal autocorrelation leakage | Resting-state fMRI, longitudinal studies |

| Preprocessing Recalculation per Fold | High (3-10x) | Eliminates preprocessing leakage | Studies with intensive normalization/denoising |
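As one illustration of the blocked/time-series aware row in the table above, the sketch below uses scikit-learn's TimeSeriesSplit with a gap between training and test blocks, so that serially correlated neighbouring time points do not straddle the split. The series length and gap size are arbitrary assumptions; an appropriate gap depends on the autocorrelation of the actual signal, and this forward-chaining scheme applies to within-series prediction rather than to splitting across subjects.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

n_timepoints = 200                   # hypothetical length of one scan's time series
X = np.zeros((n_timepoints, 10))     # placeholder feature matrix (time x features)
gap = 10                             # time points discarded between train and test blocks

splitter = TimeSeriesSplit(n_splits=4, gap=gap)
folds = list(splitter.split(X))

for fold, (train_idx, test_idx) in enumerate(folds):
    # Training always precedes testing, separated by at least `gap` samples
    print(
        f"fold {fold}: train 0-{train_idx.max()}, "
        f"test {test_idx.min()}-{test_idx.max()}"
    )
```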

Experimental Protocols

Protocol 3.1: Nested Cross-Validation for Feature Selection

Objective: To obtain an unbiased performance estimate when feature selection or hyperparameter tuning is required. Materials: Neuroimaging dataset (e.g., structural MRI features), ML library (e.g., scikit-learn, nilearn). Procedure:

- Outer Loop: Partition data into K outer folds. For k=1 to K:
  a. Designate fold k as the outer test set. The remaining K-1 folds constitute the outer training set.
  b. Inner Loop: Partition the outer training set into L inner folds.
  c. Perform feature selection/hyperparameter tuning only on the inner folds. Use techniques like ANOVA F-test, recursive feature elimination (RFE), or LASSO, training on L-1 inner folds and validating on the held-out inner fold. Repeat for all L inner folds.
  d. Identify the optimal feature set/hyperparameters based on average inner-loop performance.
  e. Critical Step: Using only the outer training set, re-train a model with the optimal feature set/hyperparameters.
  f. Evaluate this final model on the outer test set (fold k), which has never been used for selection or tuning.

- The final performance is the average across all K outer test folds.
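The difference between selecting features on the full dataset and selecting them inside the nested procedure can be sketched on pure-noise data, where an honest estimate should sit near chance. The example below is illustrative only: the sample size, feature count, candidate values of k, and the choice of an ANOVA F-test with logistic regression are assumptions, and one sample per subject is assumed so that plain stratified folds are adequate.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline

rng = np.random.default_rng(0)
X = rng.normal(size=(40, 2000))   # pure noise: features carry no label information
y = np.tile([0, 1], 20)           # balanced, arbitrary labels

outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
inner_cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=1)

# Leaky: features are chosen using every sample, including future test samples
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky_acc = cross_val_score(
    LogisticRegression(max_iter=1000), X_leaky, y, cv=outer_cv
).mean()

# Nested: selection and tuning of k are refit inside each outer training set
pipe = Pipeline([
    ("select", SelectKBest(f_classif)),
    ("clf", LogisticRegression(max_iter=1000)),
])
search = GridSearchCV(pipe, {"select__k": [10, 20, 50]}, cv=inner_cv)
nested_acc = cross_val_score(search, X, y, cv=outer_cv).mean()

print(f"leaky estimate:  {leaky_acc:.2f}")
print(f"nested estimate: {nested_acc:.2f}")
```

On noise data the leaky estimate is typically far above chance while the nested estimate is not, which is the inflation the protocol is designed to remove.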

Protocol 3.2: Subject-Level & Group-Level Data Splitting

Objective: Prevent leakage of subject-specific or site-specific information. Materials: Dataset with subject and site/scanner metadata. Procedure:

- Subject-Level: Before any preprocessing, generate a list of unique subject IDs. Perform all splitting (train/validation/test) based on these IDs. All data (e.g., multiple sessions, runs) from a single subject must reside in only one split.

- Group-Level (for Multi-Site Data): a. Identify the grouping variable (e.g., scanner site, study cohort). b. For a robust hold-out test set, hold out all data from one or more entire sites. c. For cross-validation, perform splits such that all data from a given site is contained within a single fold (e.g., "Leave-One-Site-Out" CV).
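Both splitting levels can be sketched with scikit-learn's group-aware splitters. The metadata below (12 subjects with two sessions each, spread over three sites named siteA to siteC) is hypothetical and stands in for identifiers that would normally come from the dataset's own subject and site labels.

```python
import numpy as np
from sklearn.model_selection import GroupKFold, LeaveOneGroupOut

# Hypothetical metadata: 12 subjects x 2 sessions, 4 subjects per site
subject_ids = np.repeat(np.arange(12), 2)
site_ids = np.repeat(np.array(["siteA", "siteB", "siteC"]), 8)
X = np.zeros((subject_ids.size, 5))   # placeholder features

# Subject-level: every session of a subject lands in the same fold
subject_folds = list(GroupKFold(n_splits=4).split(X, groups=subject_ids))
for train_idx, test_idx in subject_folds:
    assert set(subject_ids[train_idx]).isdisjoint(subject_ids[test_idx])

# Group-level: Leave-One-Site-Out, each fold holds out one entire site
site_folds = list(LeaveOneGroupOut().split(X, groups=site_ids))
for train_idx, test_idx in site_folds:
    held_out = np.unique(site_ids[test_idx])
    print(f"held-out site: {held_out[0]}, test scans: {test_idx.size}")
```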

Protocol 3.3: Preprocessing Without Leakage

Objective: Calculate preprocessing parameters (e.g., mean, variance, PCA components) without using future test data. Materials: Raw neuroimaging data, preprocessing pipelines (e.g., fMRIPrep, SPM, custom scripts). Procedure:

- After performing subject/group-level splits, handle each data split separately during preprocessing: all preprocessing parameters are estimated on the training split only, never on pooled data.

- For the training set, fit all preprocessing transformers (e.g., a StandardScaler).

- Critical Step: Use the parameters from the training set fit (e.g., mean and standard deviation) to transform both the training and the held-out test/validation sets.

- Never fit a preprocessing transformer (normalization, imputation, smoothing kernel size optimization) on the combined dataset.
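The fit-on-train, transform-both pattern from this protocol can be sketched with a StandardScaler. The arrays are synthetic stand-ins for already-split feature matrices, and their names are assumptions made for the example.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(1)
X_train = rng.normal(loc=5.0, scale=2.0, size=(30, 4))   # synthetic training features
X_test = rng.normal(loc=5.0, scale=2.0, size=(10, 4))    # synthetic held-out features

scaler = StandardScaler().fit(X_train)    # mean and std estimated from training data only
X_train_z = scaler.transform(X_train)
X_test_z = scaler.transform(X_test)       # reuses the training parameters; no refit

# The leakage-prone variant would be StandardScaler().fit(np.vstack([X_train, X_test]))
print("training means used for both sets:", np.round(scaler.mean_, 2))
```

The transformed test set will generally not have exactly zero mean and unit variance, which is expected: it has been scaled with parameters it did not contribute to.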

Mandatory Visualizations

Diagram 1: Nested vs. Non-Nested CV for Feature Selection

Diagram 2: Leakage-Prone vs. Correct Preprocessing

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Leakage-Prevention in Neuroimaging ML

| Item / Solution | Function / Purpose | Example Implementations |

|---|---|---|

| Nested CV Software | Automates the complex double-loop validation, ensuring correct data flow. | scikit-learn Pipeline + GridSearchCV (inner loop) wrapped in cross_val_score or cross_validate (outer loop), with custom CV splitters. |

| Subject/Group-Aware Splitters | Enforces splitting at the level of independent experimental units. | scikit-learn GroupKFold, LeaveOneGroupOut; custom splitters for longitudinal data. |

| Pipeline Containers | Encapsulates and sequences preprocessing, feature selection, and model training to prevent fitting on test data. | scikit-learn Pipeline & ColumnTransformer. |

| Data Version Control (DVC) | Tracks exact dataset splits, preprocessing code, and parameters to ensure reproducibility of the data flow. | DVC (Open-Source), Pachyderm. |

| Leakage Detection Audits | Statistical and ML-based checks to identify potential contamination in final models. | Permutation tests on feature importance or model score; comparing train/test distributions (KS-test). |

| Domain-Specific CV Splitters | Handles structured neuroimaging data (time series, connectomes, multi-site). | scikit-learn TimeSeriesSplit and LeaveOneGroupOut (e.g., grouped by site); pmdarima RollingForecastCV for time-series. |
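One possible form of the permutation-based audit listed in the table is scikit-learn's permutation_test_score, which compares the cross-validated score against a null distribution obtained by shuffling the labels. The sketch below runs it on noise data with one sample per subject; the data, classifier, and number of permutations are illustrative assumptions, and a real audit would use the full pipeline under test.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, permutation_test_score

rng = np.random.default_rng(3)
X = rng.normal(size=(40, 20))   # synthetic features with no true signal
y = np.tile([0, 1], 20)         # balanced labels, one sample per subject assumed

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
score, perm_scores, p_value = permutation_test_score(
    LogisticRegression(max_iter=1000),
    X,
    y,
    cv=cv,
    n_permutations=50,
    random_state=0,
)

print(f"observed accuracy: {score:.2f}")
print(f"null mean accuracy: {perm_scores.mean():.2f}, p-value: {p_value:.3f}")
```

A score that clearly beats the permutation null on data expected to carry little signal, or a null distribution centred well above chance, is a cue to re-examine the pipeline for contamination.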

Within the broader thesis on cross-validation (CV) protocols for neuroimaging machine learning research, the small-n-large-p problem presents the central methodological challenge. Neuroimaging datasets routinely feature thousands to millions of voxels/features (p) from a limited number of participants (n). Standard CV protocols fail, yielding optimistically biased, high-variance performance estimates and unstable feature selection. This document outlines applied strategies and protocols to produce generalizable, reproducible models under these constraints.

Core CV Strategies & Comparative Data

Table 1: Comparative Analysis of CV Strategies for Small-n-Large-p

| Strategy | Key Mechanism | Advantages | Disadvantages | Typical Use Case |

|---|---|---|---|---|

| Nested CV | Outer loop: performance estimation. Inner loop: model/hyperparameter optimization. | Unbiased performance estimate; prevents data leakage. | Computationally intensive; complex implementation. | Final model evaluation & reporting. |